1. Introduction

This specification defines the Immersive Audio Model and Formats (IAMF) to provide an Immersive Audio experience to end-users.

IAMF is used to provide Immersive Audio content for presentation on a wide range of devices in both streaming and offline applications. These applications include internet audio streaming, multicasting/broadcasting services, file download, gaming, communication, virtual and augmented reality, and others. In these applications, audio may be played back on a wide range of devices, e.g., headphones, mobile phones, tablets, TVs, sound bars, home theater systems, and big screens.

Here are some typical IAMF use cases and examples of how to instantiate the model for the use cases.

-

UC1: One Audio Element (e.g., 3.1.2ch or First Order Ambisonics (FOA)) is delivered to a big-screen TV (in a home) or a mobile device through a unicast network. It is rendered to a loudspeaker layout (e.g., 3.1.2ch) or headphones with loudness normalization, and is played back on loudspeakers built into the big-screen TV or headphones connected to the mobile device, respectively.

-

UC2: Two Audio Elements (e.g., 5.1.2ch and Stereo) are delivered to a big-screen TV through a unicast network. Both are rendered to the same loudspeaker layout built into the big-screen TV and are mixed. After applying loudness normalization appropriate to the home environment, the Rendered Mix Presentation is played back on the loudspeakers.

-

UC3: Two Audio Elements (e.g., FOA and Non-diegetic Stereo) are delivered to a mobile device through a unicast network. FOA is rendered to Binaural (or Stereo) and Non-diegetic is rendered to Stereo. After mixing them, it is processed with loudness normalization and is played back on headphones through the mobile device.

-

UC4: Four Audio Elements for multi-language service (e.g., 5.1.2ch and 3 different Stereo dialogues, one for English, the second for Spanish, and the third for Korean) are delivered to an end-user device through a unicast network. The end-user (or the device) selects his preferred language so that 5.1.2ch and the Stereo dialogue associated with the language are rendered to the same loudspeaker layout and are mixed. After applying loudness normalization appropriate to its environment, the Rendered Mix Presentation is played back on the loudspeakers.

Example 1: UC1 with 3D audio signal = 3.1.2ch.

-

Audio Substream: The Left (L) and Right (R) channels are coded as one audio stream, the Left top front (Ltf) and Right top front (Rtf) channels as one audio stream, the Centre channel as one audio stream, and the Low-Frequency Effects (LFE) channel as one audio stream.

-

Audio Element (3.1.2ch): Consists of 4 Audio Substreams which are grouped into one Channel Group.

-

Mix Presentation: Provides rendering algorithms for rendering the Audio Element to popular loudspeaker layouts and headphones, and the loudness information of the 3D audio signal.

Example 2: UC2 with two 3D audio signals = 5.1.2ch and Stereo.

-

Audio Substream: The L and R channels are coded as one audio stream, the Left surround (Ls) and Right surround (Rs) channels as one audio stream, the Ltf and Rtf channels as one audio stream, the Centre channel as one audio stream, and the LFE channel as one audio stream.

-

Audio Element 1 (5.1.2ch): Consists of 5 Audio Substreams which are grouped into one Channel Group.

-

Audio Element 2 (Stereo): Consists of 1 Audio Substream which is grouped into one Channel Group.

-

Parameter Substream 1-1: Contains mixing parameter values that are applied to Audio Element 1 by considering the home environment.

-

Parameter Substream 1-2: Contains mixing parameter values that are applied to Audio Element 2 by considering the home environment.

-

Mix Presentation: Provides rendering algorithms for rendering Audio Elements 1 & 2 to popular loudspeaker layouts, mixing information based on Parameter Substreams 1-1 & 1-2, and loudness information of the Rendered Mix Presentation.

Example 3: UC3 with two 3D audio signals = First Order Ambisonics (FOA) and Non-diegetic Stereo.

-

Audio Substream: The L and R channels are coded as one audio stream and each channel of the FOA signal as one audio stream.

-

Audio Element 1 (FOA): Consists of 4 Audio Substreams which are grouped into one Channel Group.

-

Audio Element 2 (Non-diegetic Stereo): Consists of 1 Audio Substream which is grouped into one Channel Group.

-

Parameter Substream 1-1: Contains mixing parameter values that are applied to Audio Element 1 by considering the mobile environment.

-

Parameter Substream 1-2: Contains mixing parameter values that are applied to Audio Element 2 by considering the mobile environment.

-

Mix Presentation: Provides rendering algorithms for rendering Audio Elements 1 & 2 to popular loudspeaker layouts and headphones, mixing information based on Parameter Substreams 1-1 & 1-2, and loudness information of the Rendered Mix Presentation.

Example 4: UC4 with four 3D audio signals = 5.1.2ch and 3 Stereo dialogues for English/Spanish/Korean.

-

Audio Substream: The L and R channels are coded as one audio stream, the Left surround (Ls) and Right surround (Rs) channels as one audio stream, the Ltf and Rtf channels as one audio stream, the Centre channel as one audio stream, and the LFE channel as one audio stream.

-

Audio Element 1 (5.1.2ch): Consists of 5 Audio Substreams which are grouped into one Channel Group.

-

Audio Element 2 (Stereo dialogue for English): Consists of 1 Audio Substream which is grouped into one Channel Group.

-

Audio Element 3 (Stereo dialogue for Spanish): Consists of 1 Audio Substream which is grouped into one Channel Group.

-

Audio Element 4 (Stereo dialogue for Korean): Consists of 1 Audio Substream which is grouped into one Channel Group.

-

Parameter Substream 1-1: Contains mixing parameter values that are applied to Audio Element 1 by considering to be mixed with Audio Element 2, 3, or 4.

-

Parameter Substream 1-2: Contains mixing parameter values that are applied to Audio Element 2, 3, or 4 by considering to be mixed with Audio Element 1.

-

Mix Presentation 1: Provides rendering algorithms for rendering Audio Elements 1 & 2 to popular loudspeaker layouts and headphones, mixing information based on Parameter Substreams 1-1 & 1-2, content language information (English) for Audio Element 2, and loudness information of the Rendered Mix Presentation.

-

Mix Presentation 2: Provides rendering algorithms for rendering Audio Elements 1 & 3 to popular loudspeaker layouts and headphones, mixing information based on Parameter Substreams 1-1 & 1-2, content language information (Spanish) for Audio Element 3, and loudness information of the Rendered Mix Presentation.

-

Mix Presentation 3: Provides rendering algorithms for rendering Audio Elements 1 & 4 to popular loudspeaker layouts and headphones, mixing information based on Parameter Substreams 1-1 & 1-2, content language information (Korean) for Audio Element 4, and loudness information of the Rendered Mix Presentation.

2. Immersive Audio Model

2.1. Model Overview

This specification defines a model for representing Immersive Audio contents based on Audio Substreams contributing to Audio Elements meant to be rendered and mixed to form one or more presentations as depicted in the figure below.

The model comprises a number of coded Audio Substreams and the metadata that describes how to decode, render, and mix the Audio Substreams for playback. The model itself is codec-agnostic; any supported audio codec MAY be used to code the Audio Substreams.

The model includes one or more Audio Elements, each of which consists of one or more Audio Substreams. The Audio Substreams that make up an Audio Element are grouped into one or more Channel Groups. The model further includes Mix Presentations and Parameter Substreams.

The term 3D audio signal means a representation of sound that incorporates additional information beyond traditional stereo or surround sound formats such as Ambisonics (Scene-based audio), Object-based audio and Channel-based audio (e.g., 3.1.2ch or 7.1.4ch).

The term channel means a component of Scene-based audio, a component of Object-based audio, or a component of Channel-based audio. When used in the context of Channel-based audio, it refers to loudspeaker-based channels.

The term Immersive Audio (IA) means the combination of 3D audio signals recreating a sound experience close to that of a natural environment.

The term Audio Substream means a sequence of audio samples, which MAY be encoded with any compatible audio codec.

The term Channel Group means a set of Audio Substream(s) which is(are) able to provide a spatial resolution of audio contents by itself or which is(are) able to provide an enhanced spatial resolution of audio contents by combining with the preceding Channel Groups.

The term Audio Element means a 3D audio signal, and is constructed from one or more Audio Substreams (grouped into one or more Channel Groups) and the metadata describing them. The Audio Substreams associated with one Audio Element use the same audio codec.

The term Mix Presentation means a series of processes to present Immersive Audio contents to end-users by using Audio Element(s). It contains metadata that describes how the Audio Element(s) is(are) rendered and mixed together for playback through physical loudspeakers or headphones, as well as loudness information.

The term Parameter Substream means a sequence of parameter values that are associated with the algorithms used for reconstructing, rendering, and mixing. It is applied to its associated Audio Element or Mix Presentation. Parameter Substreams MAY change their values over time and MAY further be animated; for example, any changes in values MAY be smoothed over some time duration. As such, they MAY be viewed as a 1D signal with different metadata specified for different time durations.

The term Rendered Mix Presentation means a 3D audio signal after the Audio Element(s) defined in a Mix Presentation is(are) rendered and mixed together for playback through physical loudspeakers or headphones.

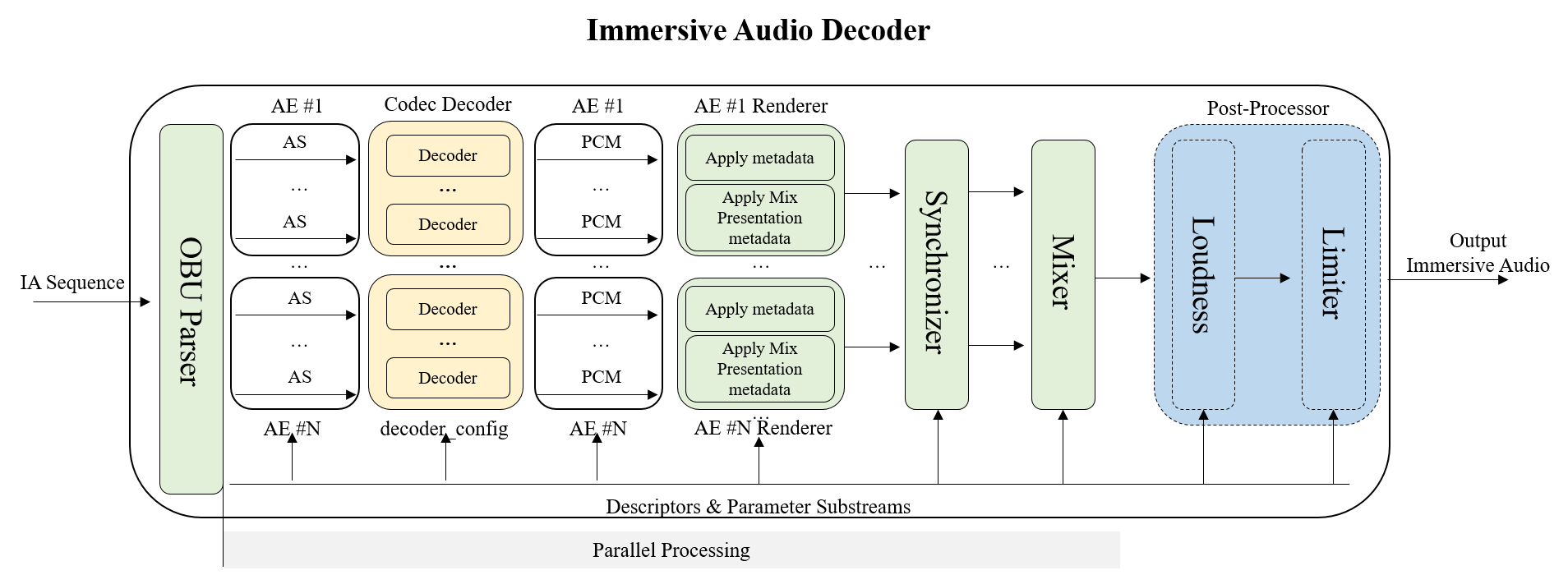

2.2. Architecture

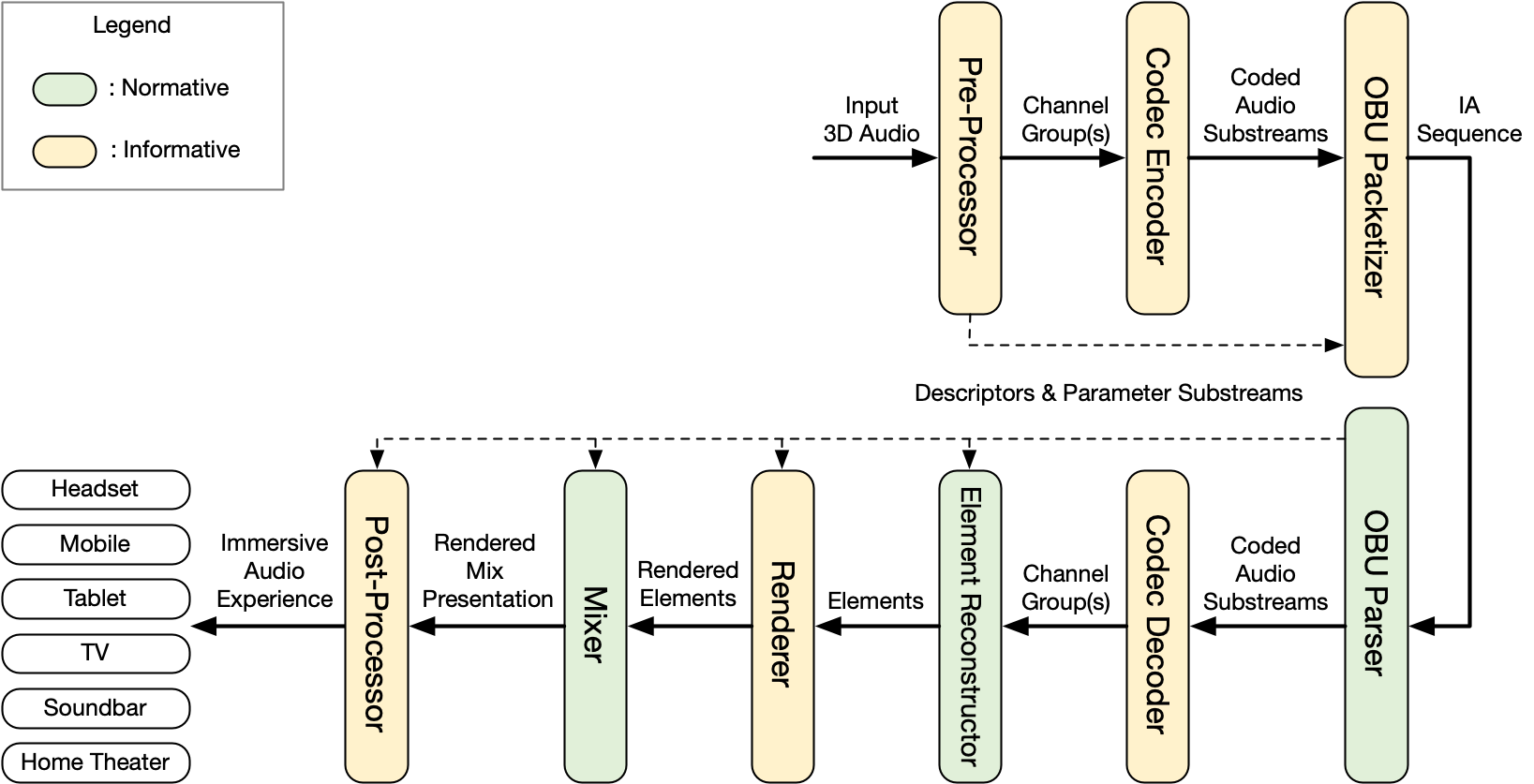

Based on the model, this specification defines the Immersive Audio Model and Formats (IAMF) architecture as depicted in the figure below.

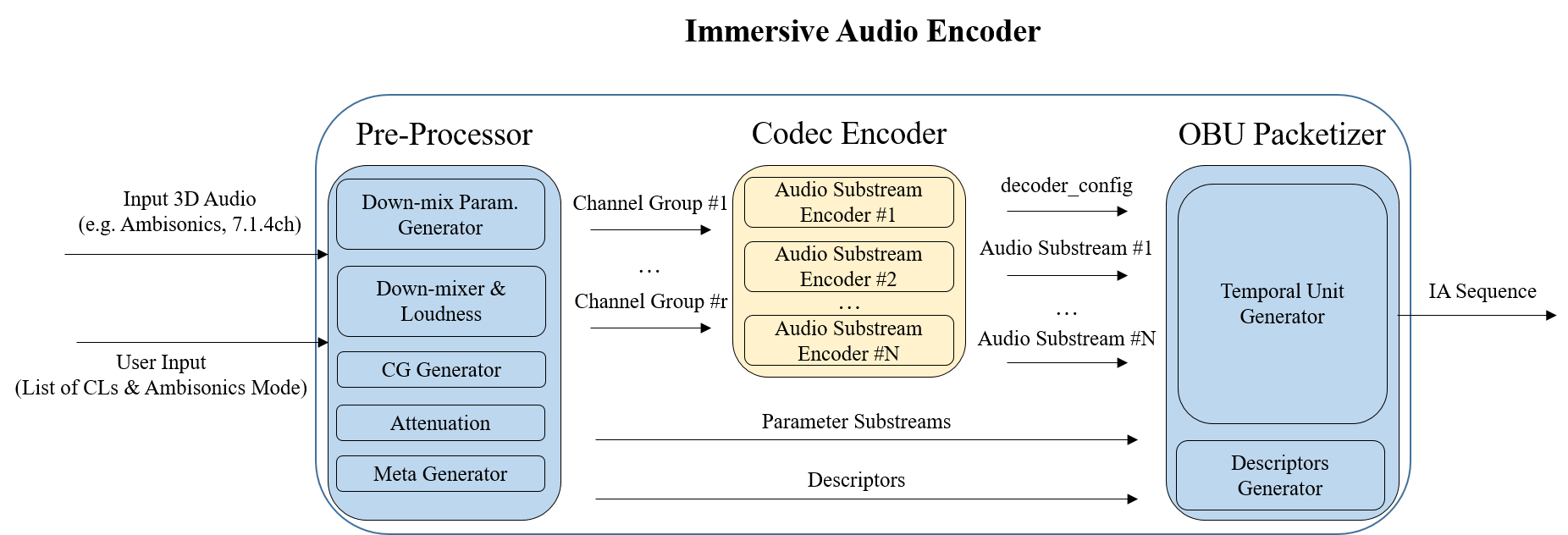

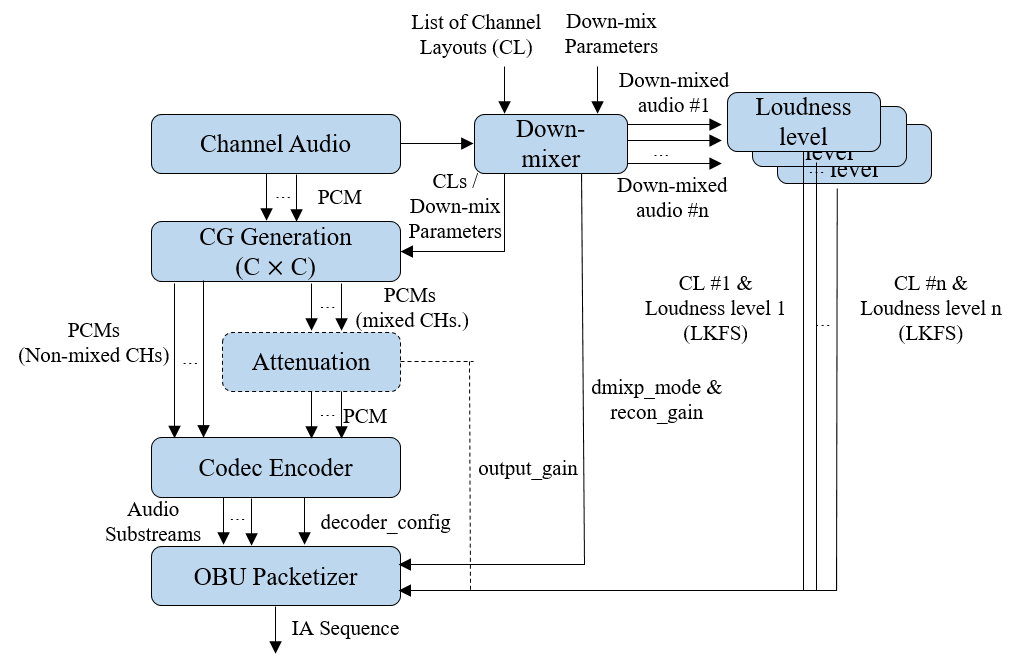

For a given input 3D audio signal,

-

A Pre-Processor generates the Channel Group(s), Descriptors and Parameter Substream(s).

-

A Codec Encoder generates the coded Audio Substream(s).

-

An OBU Packetizer generates an IA Sequence from the coded Audio Substream(s), Descriptors and Parameter Substream(s).

-

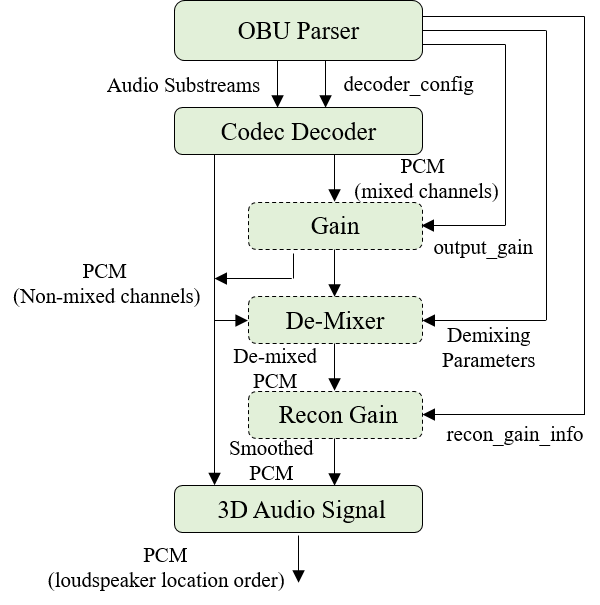

An OBU Parser outputs the coded Audio Substream(s) and the Parameter Substream(s) from the IA Sequence.

-

A Codec Decoder outputs decoded Channel Group(s) after decoding the coded Audio Substream(s).

-

An Element Reconstructor re-assembles the Audio Elements by combining the Channel Group(s) guided by Descriptors and Parameter Substream(s).

-

A Renderer can be used to render the Audio Elements to a multi-channel or binaural format based on Descriptors.

-

A Mixer sums the rendered Audio Elements and applies further mixing parameters guided by the Descriptors and the Parameter Substream(s).

-

A Post-Processor outputs an Immersive Audio by using the Channel Group(s), the Descriptors, and the Parameter Substream(s).

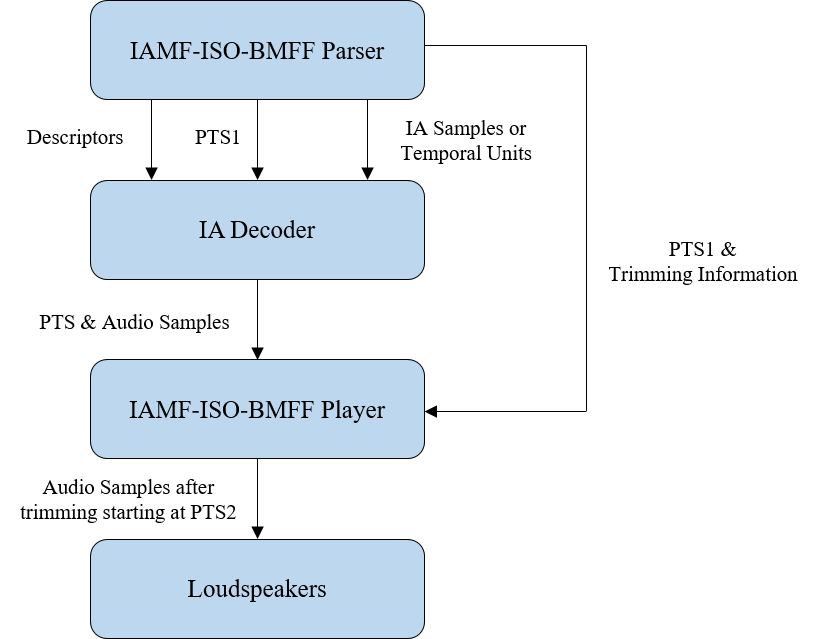

An IAMF generation processing including the Pre-Processor, the Channel Group(s), the Codec Encoder, and the OBU Packetizer are defined in § 10.1 Annex A: IAMF Generation Process (Informative). The IA Sequence is defined in § 5.1 IA Sequence. An IAMF processing including the OBU Parser, the Codec Decoder, the Element Reconstructor, the Renderer, the Mixer, and the Post-Processor are defined in § 7 IAMF Processing.

Although not shown in the figure above, the IA Sequence MAY be encapsulated by a file packager, such as the ISO-BMFF Encapsulation, to output an IAMF file (ISO-BMFF file). Then, a file parser, such as the ISO-BMFF Parser, decapsulates it to output the IA Sequence. The ISO-BMFF Encapsulation, IAMF file (ISO-BMFF file), and ISO-BMFF Parser are defined in § 6 ISO-BMFF IAMF Encapsulation.

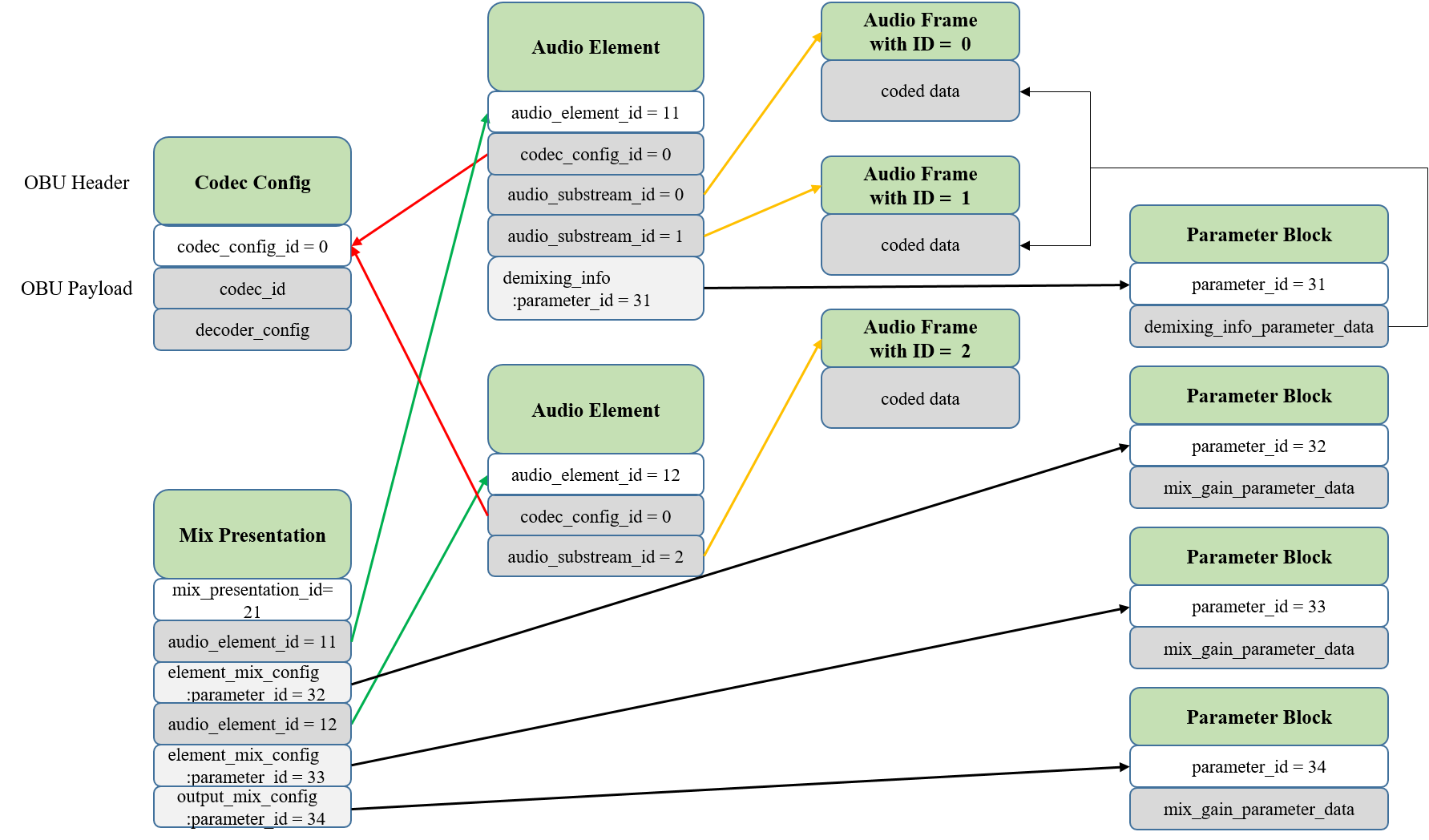

2.3. Bitstream Structure

2.3.1. Overview

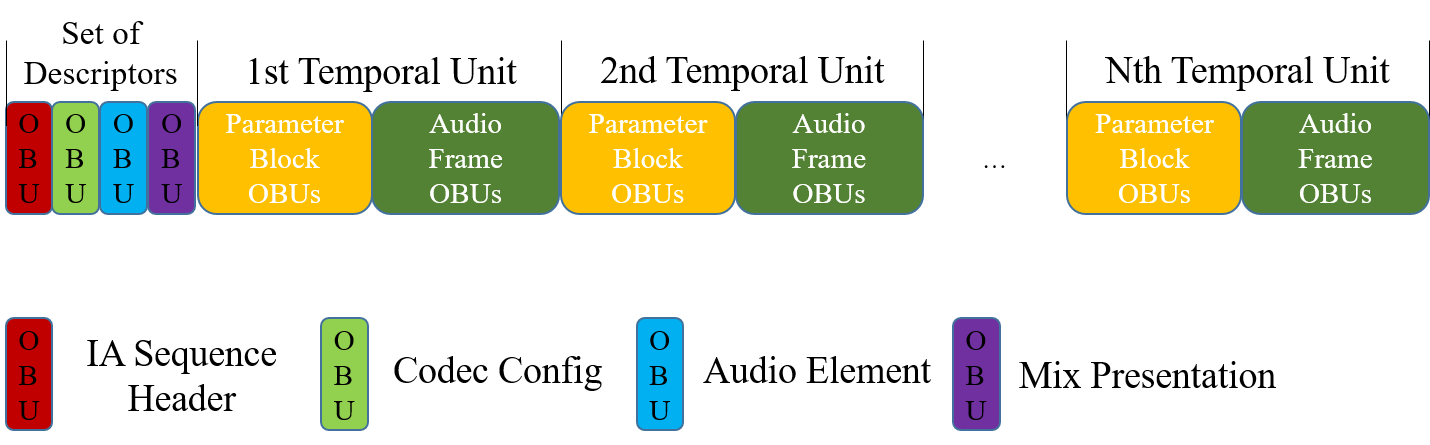

An IA Sequence is a bitstream to represent Immersive Audio contents and consists of Descriptors and IA Data.

The metadata in the Descriptors and IA Data are packetized into individual Open Bitstream Units (OBU)s. The term Open Bitstream Unit (OBU) is the concrete, physical unit used to represent the components in the model. In this specification, the term IA OBU can be used interchangeably with OBU.

The normative definitions for an IA Sequence are defined in § 5.1 IA Sequence.

2.3.2. Categorization and Use of Immersive Audio OBUs

2.3.2.1. Descriptors

Descriptors contain all the information that is required to set up and configure the decoders, reconstruction algorithms, renderers, and mixers. Descriptors do not contain audio signals.

-

The IA Sequence Header OBU indicates the start of a full IA Sequence description and contains information related to profiles.

-

The Codec Config OBU provides information which is required for setting up a decoder for a coded Audio Substream.

-

The Audio Element OBU provides information which is required for combining one or more Audio Substreams to reconstruct an Audio Element.

-

The Mix Presentation OBU provides information which is required for rendering and mixing one or more Audio Elements to generate the final Immersive Audio output.

-

Multiple Mix Presentations can be defined as alternatives to each other within the same IA Sequence. Furthermore, the choice of which Mix Presentation to use at playback is left to the user. For example, multi-language support is implemented by defining different Mix Presentations, where the first mix describes the use of the Audio Element with English dialogue, and the second mix describes the use of the Audio Element with French dialogue.

-

2.3.2.2. IA Data

IA Data contains the time-varying data that is required in the generation of the final Immersive Audio output.

-

The Audio Frame OBU provides the coded audio frame for an Audio Substream. Each frame has an implied start timestamp and an explicitly defined duration. A coded Audio Substream is represented as a sequence of Audio Frame OBUs with the same identifier, in time order.

-

The Parameter Block OBU provides the parameter values in a block for a Parameter Substream. Each block has an implied start timestamp and an explicitly defined duration. A time-varying Parameter Substream is represented as a sequence of parameter values in Parameter Block OBUs with the same identifier, in time order.

-

The Temporal Delimiter OBU identifies the Temporal Units. It MAY or MAY NOT be present in IA Sequence. If present, the first OBU of every Temporal Unit is the Temporal Delimiter OBU.

2.4. Timing Model

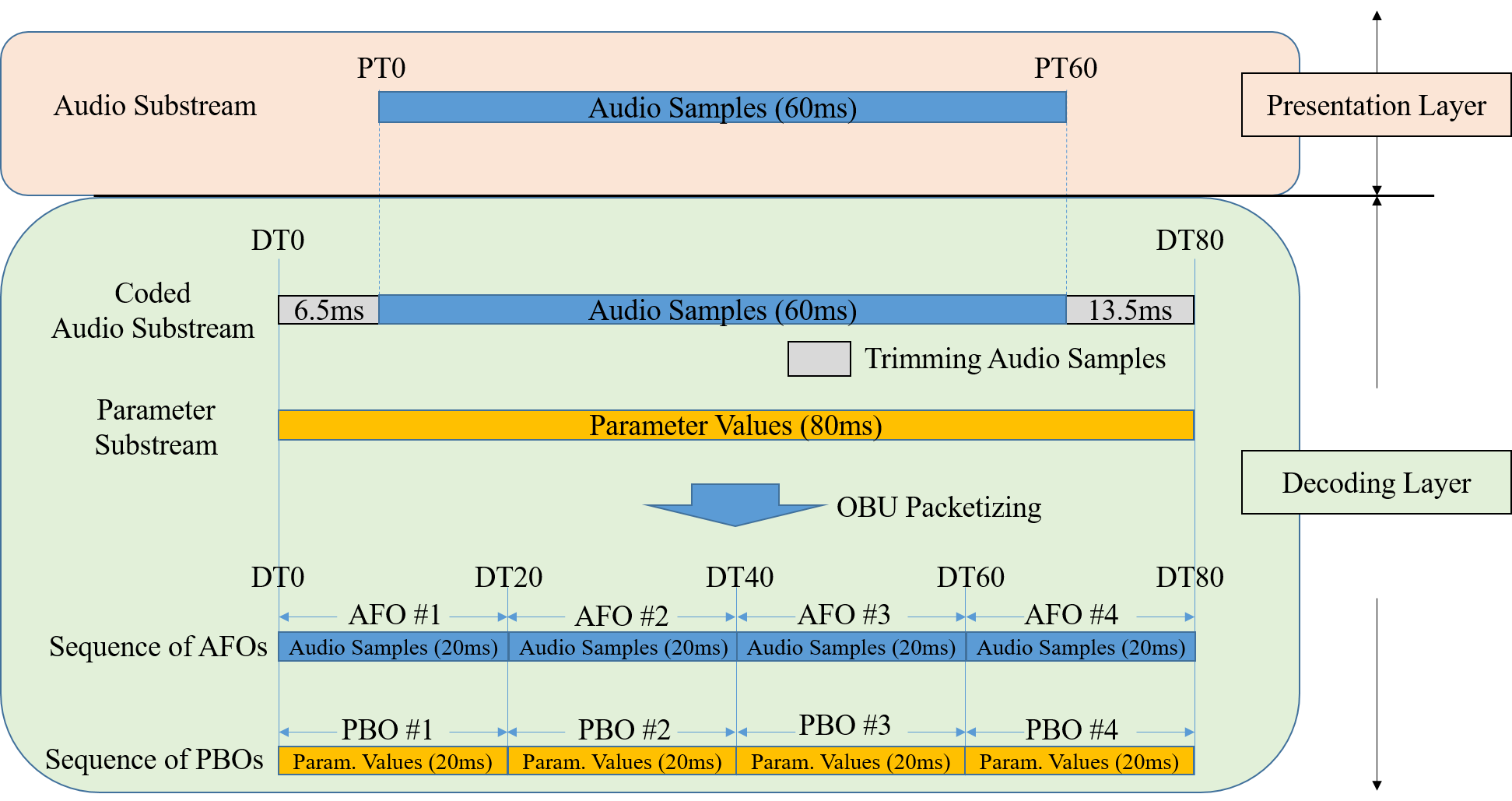

A coded Audio Substream is made of consecutive Audio Frame OBUs. Each Audio Frame OBU is made of audio samples at a given sample rate. The decode duration of an Audio Frame OBU is the number of audio samples divided by the sample rate. The presentation duration of an Audio Frame OBU is the number of audio samples remaining after trimming divided by the sample rate. The decode start time (respectively presentation start time) of an Audio Frame OBU is the sum of the decode durations (respectively presentation durations) of previous Audio Frame OBUs in the IA Sequence, or 0 otherwise. The decode duration (respectively presentation duration) of a coded Audio Substream is the sum of the decode durations (respectively presentation durations) of all its Audio Frame OBUs. The decode start time of an Audio Substream is the decode start time of its first Audio Frame OBU. The presentation start time of an Audio Substream is the presentation start time of its first Audio Frame OBU which is not entirely trimmed.

A Parameter Substream is made of consecutive Parameter Block OBUs. Each Parameter Block OBU is made of parameter values at a given sample rate. The decode duration of a Parameter Block OBU is the number of parameter values divided by the sample rate. The decode start time of a Parameter Block OBU is the sum of the decode duration of previous Parameter Block OBUs if any, 0 otherwise. The decode duration of a Parameter Substream is the sum of all its Parameter Block OBUs' decode durations. The start time of a Parameter Substream is the decode start time of its first Parameter Block OBU. When all parameter values in a Parameter Substream are constant, no Parameter Block OBUs MAY be present in the IA Sequence.

Within an Audio Element, the presentation start times of all Audio Substreams coincide and are the presentation start time of the Audio Element. All Audio Substreams have the same presentation duration which is the presentation duration of the Audio Element.

-

The decode start times of all coded Audio Substreams and all Parameter Substreams coincide and are the decode start time of the Audio Element.

-

All coded Audio Substreams and all Parameter Substreams have the same decode duration which is the decode duration of the Audio Element.

Within a Mix Presentation, the presentation start time of all Audio Elements coincide and all Audio Elements have the same duration defining the duration of the Mix Presentation.

Within an IA Sequence, all Mix Presentations have the same duration, defining the duration of the IA Sequence, and have the same presentation start time defining the presentation start time of the IA Sequence.

The term Temporal Unit conceptually means a set of all Audio Frame OBUs with the same decode start time and the same duration from all coded Audio Substreams and all non-redundant Parameter Block OBUs with the decode start time within the duration.

The figure below shows an example of the Timing Model in terms of the decode start times and durations of the coded Audio Substream and Parameter Substream.

NOTE: For a given decoded Audio Substream (before trimming) and its associated Parameter Substream(s), a decoder may apply trimming in 1 of 2 ways:

1) The decoder processes the Audio Substream using the Parameter Substream(s), and then trims the processed audio samples.

2) The decoder trims both the Audio Substream and the Parameter Substream(s). Then, the decoder processes the trimmed Audio Substream using the trimmed Parameter Substream(s).

3. Open Bitstream Unit (OBU) Syntax and Semantics

The IA Sequence uses the OBU syntax.

This section specifies the OBU syntax elements and their semantics.

3.1. Immersive Audio OBU Syntax and Semantics

OBUs are structured with an OBU Header and an OBU payload.

The OBU Header and all OBU payloads, including the Reserved OBU, are byte aligned.

Syntax

class IAOpenBitstreamUnit() {

OBUHeader obu_header;

if (obu_type == OBU_IA_Sequence_Header)

IASequenceHeaderOBU ia_sequence_header_obu;

else if (obu_type == OBU_IA_Codec_Config)

CodecConfigOBU codec_config_obu;

else if (obu_type == OBU_IA_Audio_Element)

AudioElementOBU audio_element_obu;

else if (obu_type == OBU_IA_Mix_Presentation)

MixPresentationOBU mix_presentation_obu;

else if (obu_type == OBU_IA_Parameter_Block)

ParameterBlockOBU parameter_block_obu;

else if (obu_type == OBU_IA_Temporal_Delimiter)

TemporalDelimiterOBU temporal_delimiter_obu;

else if (obu_type == OBU_IA_Audio_Frame)

AudioFrameOBU audio_frame_obu(true);

else if (obu_type >= 6 and <= 23)

AudioFrameOBU audio_frame_obu(false);

else if (obu_type >=24 and <= 30)

ReservedOBU reserved_obu;

}

Semantics

If the syntax element obu_type is equal to OBU_IA_Sequence_Header, an ordered series of OBUs is presented to the decoding process as a string of bytes.

3.2. OBU Header Syntax and Semantics

This section specifies the format of the OBU Header.

Syntax

class OBUHeader() {

unsigned int (5) obu_type;

unsigned int (1) obu_redundant_copy;

unsigned int (1) obu_trimming_status_flag;

unsigned int (1) obu_extension_flag;

leb128() obu_size;

if (obu_trimming_status_flag) {

leb128() num_samples_to_trim_at_end;

leb128() num_samples_to_trim_at_start;

}

if (obu_extension_flag) {

leb128() extension_header_size;

unsigned int (8 x extension_header_size) extension_header_bytes;

}

}

Semantics

obu_type specifies the type of data structure contained in the OBU payload.

obu_type: Name of obu_type 0 : OBU_IA_Codec_Config 1 : OBU_IA_Audio_Element 2 : OBU_IA_Mix_Presentation 3 : OBU_IA_Parameter_Block 4 : OBU_IA_Temporal_Delimiter 5 : OBU_IA_Audio_Frame 6~23 : OBU_IA_Audio_Frame_ID0 to OBU_IA_Audio_Frame_ID17 24~30 : Reserved for future use 31 : OBU_IA_Sequence_Header

obu_redundant_copy indicates whether this OBU is a redundant copy of the previous OBU with the same obu_type in the IA Sequence. A value of 1 indicates that it is a redundant copy, while a value of 0 indicates that it is not.

It SHALL always be set to 0 for the following obu_type values:

-

OBU_IA_Temporal_Delimiter

-

OBU_IA_Audio_Frame

-

OBU_IA_Audio_Frame_ID0 to OBU_IA_Audio_Frame_ID17

If a decoder encounters an OBU with obu_redundant_copy = 1, and it has also received the previous non-redundant OBU, it MAY ignore the redundant OBU. If the decoder has not received the previous non-redundant OBU, it SHALL treat the redundant copy as a non-redundant OBU and process the OBU accordingly.

obu_trimming_status_flag indicates whether this OBU has audio samples to be trimmed. It SHALL be set to 0 or 1 if the obu_type is set to OBU_IA_Audio_Frame or OBU_IA_Audio_Frame_ID0 to OBU_IA_Audio_Frame_ID17. Otherwise, it SHALL be set to 0.

For a given coded Audio Substream,

-

If an Audio Frame OBU has its num_samples_to_trim_at_start field set to a non-zero value N, the decoder SHALL discard the first N audio samples.

-

If an Audio Frame OBU has its num_samples_to_trim_at_end field set to a non-zero value N, the decoder SHALL discard the last N audio samples.

NOTE: Because of possible coding dependencies, discarding a sample can sometimes mean still needing to decode the entire audio frame.

-

For a given Audio Frame OBU, the sum of num_samples_to_trim_at_start and num_samples_to_trim_at_end SHALL be less than or equal to the number of samples in the Audio Frame OBU (i.e., num_samples_per_frame).

NOTE: This means that if one of the values is set to the number of samples in the Audio Frame OBU (i.e., num_samples_per_frame), the other value is set to 0.

-

When num_samples_to_trim_at_start is non-zero, all Audio Frame OBUs with the same audio_substream_id, and preceding this OBU back until the Codec Config OBU defining this Audio Substream, SHALL have their num_samples_to_trim_at_start field equal to the number of samples in the corresponding Audio Frame OBU (i.e., num_samples_per_frame).

-

When num_samples_to_trim_at_end is non-zero in an Audio Frame OBU, there SHALL be no subsequent Audio Frame OBU with the same audio_substream_id until a non-redundant Codec Config OBU defining an Audio Substream with the same audio_substream_id.

obu_extension_flag indicates whether the extension_header_size field is present. If it is set to 0, the extension_header_size field SHALL NOT be present. Otherwise, the extension_header_size field SHALL be present.

NOTE: A future version of the specification may use this flag to specify an extension header field by setting obu_extension_flag = 1 and setting the size of the extended header to extension_header_size.

obu_size indicates the size in bytes of the OBU immediately following the obu_size field. If the obu_trimming_status_flag and/or obu_extension_flag fields are set to 1, obu_size SHALL include the sizes of the additional fields. The obu_size MAY be greater than the size needed to represent the OBU syntax. Parsers SHOULD ignore bytes past the OBU syntax that they recognize.

num_samples_to_trim_at_end indicates the number of samples that need to be trimmed from the end of the samples in this Audio Frame OBU.

num_samples_to_trim_at_start indicates the number of samples that need to be trimmed from the start of the samples in this Audio Frame OBU.

extension_header_size indicates the size in bytes of the extension header immediately following this field.

extension_header_bytes indicates the byte representations of the syntaxes of the extension header. Parsers that don’t understand these bytes SHOULD ignore them.

3.3. Reserved OBU Syntax and Semantics

Paresers SHOULD ignore Reserved OBUs.

NOTE: Future versions of the specification may define syntax and semantics for an obu_type value, making it no longer a Reserved OBU for those parsers compliant with these future versions.

3.4. IA Sequence Header OBU Syntax and Semantics

The IA Sequence Header OBU is used to indicate the start of an IA Sequence, i.e., the first OBU in an IA Sequence SHALL have obu_type = OBU_IA_Sequence_Header. This section specifies the payload format of the IA Sequence Header OBU.

NOTE: When an IA Sequence is stored in a file, the IA Sequence Header OBU can be used to identify that the file contains an IA Sequence.

This OBU MAY be placed frequently within one single IA Sequence for an application such as broadcasting or multicasting. In that case, all IA Sequence Header OBUs except the first one SHALL be marked as redundant (i.e., obu_redundant_copy = 1). So, if a decoder encounters a non-redundant IA Sequence Header OBU (i.e., obu_redundant_copy = 0), and it has also received the previous IA Sequence Header OBU, the non-redundant IA Sequence Header OBU indicates the start of a new IA Sequence.

Syntax

class IASequenceHeaderOBU() {

unsigned int (32) ia_code;

unsigned int (8) primary_profile;

unsigned int (8) additional_profile;

}

Semantics

ia_code is a ‘four-character code’ (4CC), iamf.

NOTE: When IA OBUs are delivered over a protocol that does not provide explicit IA Sequence boundaries, a parser may locate the IA Sequence start by searching for the code iamf preceded by specific OBU Header values. For example, by assuming that obu_extension_flag is set to 0 and because obu_trimming_status_flag is set to 0 for an IA Sequence Header OBU, the OBU Header can be either 0xF806 or 0xFC06.

primary_profile indicates the primary profile that this IA Sequence complies with. Parsers SHOULD discard the IA Sequence if they do not support the value indicated here.

The mappings below are applied for both primary_profile and additional_profile.

-

0: Simple Profile

-

1: Base Profile

-

2: Base-Enhanced Profile

-

3~255: Reserved for future profiles

additional_profile indicates an additional profile that this IA Sequence complies with. If an IA Sequence only complies with the primary_profile, this field SHALL be set to the same value as primary_profile.

NOTE: If a future version defines a new profile, e.g., HypotheticalProfile, that is backward compatible with the Base-Enhanced Profile, for example by defining new OBUs that would be ignored by the Base-Enhanced-compatible parser, an IA writer can decide to set the primary_profile to "Base-Enhanced Profile" while setting the additional_profile to "HypotheticalProfile". This way an old processor will know it can parse and produce an acceptable rendering, while a new processor still knows it can produce a better result because it will not ignore the additional features.

3.5. Codec Config OBU Syntax and Semantics

The Codec Config OBU provides information on how to set up a decoder for a coded Audio Substream.

The CodecConfig() class provides codec-specific configurations for the decoder.

This section specifies the payload format of the Codec Config OBU and the CodecConfig() class.

Syntax

class CodecConfigOBU() {

leb128() codec_config_id;

CodecConfig codec_config;

}

class CodecConfig() {

unsigned int (32) codec_id;

leb128() num_samples_per_frame;

signed int (16) audio_roll_distance;

DecoderConfig decoder_config(codec_id);

}

Semantics

codec_config_id defines an identifier for a codec configuration. Within an IA Sequence, there SHALL be one unique codec_config_id per codec. There SHALL be exactly one Codec Config OBU with a given identifier in a set of Descriptors. Audio Elements use this identifier to indicate that its corresponding Audio Substreams are coded with this codec configuration.

codec_config is an instance of the CodecConfig() class, which provides codec-specific information for setting up the decoder.

codec_id indicates a ‘four-character code’ (4CC) to identify the codec used to generate the coded Audio Substreams. This specification supports the following four codec_id values defined below:

-

Opus: All coded Audio Substreams referred to by all Audio Elements with this codec configuration SHALL comply with the [RFC-6716] specification and the decoder_config structure SHALL comply with the constraints given in § 3.11.1 OPUS Specific. -

mp4a: All coded Audio Substreams referred to by all Audio Elements with this codec configuration SHALL comply with the [AAC] specification and the decoder_config structure SHALL comply with the constraints given in § 3.11.2 AAC-LC Specific. -

fLaC: All coded Audio Substreams referred to by all Audio Elements with this codec configuration SHALL comply with the [FLAC] specification and the decoder_config structure SHALL comply with the constraints given in § 3.11.3 FLAC Specific. -

ipcm: All coded Audio Substreams referred to by all Audio Elements with this codec configuration SHALL contain linear PCM (LPCM) audio samples and the decoder_config structure SHALL comply with the constraints given in § 3.11.4 LPCM Specific.

Parsers SHOULD ignore Codec Config OBUs with a codec_id that they don’t support.

NOTE: Derived specifications or future versions of this specification may support additional codecs.

NOTE: ipcm should not be confused with lpcm, which is another 4CC to identify codecs in other container formats (e.g., QuickTime).

num_samples_per_frame indicates the frame length, in samples, of the audio_frame provided in the audio_frame_obu. It SHALL NOT be set to zero. If the decoder_config structure for a given codec specifies a value for the frame length, the two values SHALL be equal.

audio_roll_distance indicates how many audio frames prior to the current audio frame need to be decoded (and the decoded samples discarded) to set the decoder in a state that will produce the correct decoded audio signal. It SHALL always be a negative value or zero. For some audio codecs, even if an audio frame can be decoded independently, the decoded signal after decoding only that frame MAY not represent a correct, decoded audio signal, even ignoring compression artifacts. This can be due to overlap transforms. While potentially acceptable when starting to decode an Audio Substream, it MAY be problematic when automatically switching between similar Audio Substreams of different quality and/or bitrate.

-

It SHALL be set to \(-R\) when codec_id is set to

Opus, where [R = \left\lceil{\frac{3840}{\text{num_samples_per_frame}}}\right\rceil.\] -

It SHALL be set to -1 when codec_id is set to

mp4a. -

It SHALL be set to 0 when codec_id is set to

fLaCoripcm.

decoder_config is an instance of the DecoderConfig() class, which specifies the set of codec parameters required to decode the Audio Substream. It is byte aligned.

3.6. Audio Element OBU Syntax and Semantics

The Audio Element OBU provides information on how to combine one or more Audio Substreams to reconstruct an Audio Element. This section specifies the payload format of the Audio Element OBU.

Additionally, the following parameter definitions are used in the Audio Element OBU, and their syntax structures are specified in this section:

-

DemixingParamDefinition() and DefaultDemixingInfoParameterData() provide the parameter definitions for demixing info, which is required for reconstructing a scalable channel audio representation.

-

ReconGainParamDefinition() provides the parameter definition for recon gain, which is required for reconstructing a scalable channel audio representation.

Syntax

class AudioElementOBU() {

leb128() audio_element_id;

unsigned int (3) audio_element_type;

unsigned int (5) reserved_for_future_use;

leb128() codec_config_id;

leb128() num_substreams;

for (i = 0; i < num_substreams; i++) {

leb128() audio_substream_id;

}

leb128() num_parameters;

for (i = 0; i < num_parameters; i++) {

leb128() param_definition_type;

if (param_definition_type == PARAMETER_DEFINITION_DEMIXING) {

DemixingParamDefinition demixing_info;

}

else if (param_definition_type == PARAMETER_DEFINITION_RECON_GAIN) {

ReconGainParamDefinition recon_gain_info;

}

else if (param_definition_type > 2) {

leb128() param_definition_size;

unsigned int (8 x param_definition_size) param_definition_bytes;

}

}

if (audio_element_type == CHANNEL_BASED) {

ScalableChannelLayoutConfig scalable_channel_layout_config;

} else if (audio_element_type == SCENE_BASED) {

AmbisonicsConfig ambisonics_config;

} else {

leb128() audio_element_config_size;

unsigned int (8 x audio_element_config_size) audio_element_config_bytes;

}

}

class DemixingParamDefinition() extends ParamDefinition() {

DefaultDemixingInfoParameterData default_demixing_info_parameter_data;

}

class DefaultDemixingInfoParameterData() extends DemixingInfoParameterData() {

unsigned int (4) default_w;

unsigned int (4) reserved_for_future_use;

}

class ReconGainParamDefinition() extends ParamDefinition() {

}

Semantics

audio_element_id defines an identifier for an Audio Element. Within an IA Sequence, there SHALL be one unique audio_element_id per Audio Element. There SHALL be exactly one Audio Element OBU with a given identifier in a set of Descriptors. Mix Presentations refer to a particular Audio Element using this identifier.

audio_element_type specifies the audio representation of this Audio Element, which is constructed from one or more Audio Substreams. Parsers SHOULD ignore Audio Element OBUs with an audio_element_type that they do not recognize.

audio_element_type: The type of audio representation. 0 : CHANNEL_BASED 1 : SCENE_BASED 2~7 : Reserved for future use

codec_config_id indicates the identifier for the codec configuration which this Audio Element refers to. Parsers SHOULD ignore Audio Element OBUs with a codec_config_id identifying a codec_id that they don’t support.

num_substreams specifies the number of Audio Substreams that are used to reconstruct this Audio Element. It SHALL NOT be set to 0.

audio_substream_id indicates the identifier for an Audio Substream which this Audio Element refers to. When audio_element_type is CHANNEL_BASED, the ordering of audio_substream_ids within this loop SHALL comply with § 3.6.3.3 Ordering of Audio Substream Identifers.

num_parameters specifies the number of Parameter Substreams that are used by the algorithms specified in this Audio Element.

-

When audio_element_type = 0, this field SHALL be set to 0, 1, or 2.

-

When audio_element_type = 1, this field SHALL be set to 0.

-

Parsers SHALL support any value of num_parameters.

NOTE: For a given audio_element_type, a future version of the specification may define a new Parameter Substream which may be ignored by IA decoders compliant with this version of the specification. In that case, a new param_definition_type will be defined in a future version of Audio Element OBU.

param_definition_type specifies the type of the parameter definition. The parameter definition types are listed in the table below, along with their associated parameter definitions.

| param_definition_type | Parameter definition type | Parameter definition |

|---|---|---|

| 0 | PARAMETER_DEFINITION_MIX_GAIN | MixGainParamDefinition() |

| 1 | PARAMETER_DEFINITION_DEMIXING | DemixingParamDefinition() |

| 2 | PARAMETER_DEFINITION_RECON_GAIN | ReconGainParamDefinition() |

-

The type PARAMETER_DEFINITION_MIX_GAIN SHALL NOT be present in Audio Element OBU.

-

The type SHALL NOT be duplicated in one Audio Element OBU.

-

When codec_id =

fLaCoripcm, the type PARAMETER_DEFINITION_RECON_GAIN SHALL NOT be present. -

When num_layers > 1, the type PARAMETER_DEFINITION_RECON_GAIN SHALL be present.

-

When the loudspeaker_layout = 15 or the loudspeaker_layout of the (non-)scalable channel audio (i.e., num_layers = 1) is less than or equal to 3.1.2ch (i.e., Mono, Stereo, or 3.1.2ch), the type PARAMETER_DEFINITION_DEMIXING SHALL NOT be present.

-

When the highest loudspeaker_layout of the scalable channel audio (i.e., num_layers > 1) is greater than 3.1.2ch, both PARAMETER_DEFINITION_DEMIXING and PARAMETER_DEFINITION_RECON_GAIN types SHALL be present.

-

When num_layers = 1 and loudspeaker_layout is greater than 3.1.2ch (i.e., 5.1.2ch, 5.1.4ch, 7.1.2ch, or 7.1.4ch), the type PARAMETER_DEFINITION_DEMIXING MAY be present.

-

An OBU parser SHALL be able to parse param_definition_type = P (where P > 2) and param_definition_size. The OBU parser SHOULD ignore the bytes indicated by param_definition_size that it does not recognize.

demixing_info is an instance of the DemixingParamDefinition() class, which provides the parameter definition for the demixing information, which is used to reconstruct a scalable channel audio representation. The corresponding parameter data to be provided in Parameter Block OBUs with the same parameter_id is specified in the DemixingInfoParameterData() class.

In this parameter definition,

-

parameter_rate SHALL be set to the sample rate of this Audio Element.

-

param_definition_mode SHALL be set to 0.

-

duration SHALL be the same as num_samples_per_frame of this Audio Element.

-

num_subblocks SHALL be set to 1.

-

constant_subblock_duration SHALL be the same as duration.

recon_gain_info is an instance of the ReconGainParamDefinition() class, which provides the parameter definition for the gain value, which is used to reconstruct a scalable channel audio representation. The corresponding parameter data to be provided in Parameter Block OBUs with the same parameter_id is specified in the ReconGainInfoParameterData() class.

In this parameter definition,

-

parameter_rate SHALL be set to the sample rate of this Audio Element.

-

param_definition_mode SHALL be set to 0.

-

duration SHALL be the same as num_samples_per_frame of this Audio Element.

-

num_subblocks SHALL be set to 1.

-

constant_subblock_duration SHALL be same as duration.

param_definition_size indicates the size in bytes of param_definition_bytes.

param_definition_bytes represents reserved bytes for future use when new param_definition_type values are defined. Parsers SHOULD ignore these bytes when they don’t understand the parameter definition.

scalable_channel_layout_config is an instance of the ScalableChannelLayoutConfig() class, which provides the metadata required for combining the Audio Substreams referred to here in order to reconstruct a scalable channel layout.

ambisonics_config is an instance of the AmbisonicsConfig() class, which provides the metadata required for combining the Audio Substreams referred to here in order to reconstruct an Ambisonics layout.

audio_element_config_size indicates the size in bytes of audio_element_config_bytes.

audio_element_config_bytes represents reserved bytes for future use when new audio_element_type values are defined. Parsers SHOULD ignore these bytes when they don’t recognize a particular configuration.

default_demixing_info_parameter_data is an instance of the DefaultDemixingInfoParameterData() class, which provides the default demixing parameter data to apply to all audio samples when there are no Parameter Block OBUs (with the same parameter_id defined in this DemixingParamDefinition()) provided.

-

In this class, w_idx_offset in demixing_info_parameter_data SHALL be ignored.

-

Instead, default_w directly indicates the weight value \(w(k)\).

default_w indicates the weight value \(w(k)\) for the TF2toT2 de-mixer specified in § 7.2.2 De-mixer.

The mapping of default_w to \(w(k)\) SHOULD be as follows:

default_w : w(k) 0 : 0 1 : 0.0179 2 : 0.0391 3 : 0.0658 4 : 0.1038 5 : 0.25 6 : 0.3962 7 : 0.4342 8 : 0.4609 9 : 0.4821 10 : 0.5 11 ~ 15 : Reserved for future use

A default recon gain value of 0 dB is implied when there are no Parameter Block OBUs (with the same parameter_id defined in this ReconGainParamDefinition()) provided.

3.6.1. Parameter Definition Syntax and Semantics

Parameter definition classes inherit from the abstract ParamDefinition() class.

Syntax

abstract class ParamDefinition() {

leb128() parameter_id;

leb128() parameter_rate;

unsigned int (1) param_definition_mode;

unsigned int (7) reserved_for_future_use;

if (param_definition_mode == 0) {

leb128() duration;

leb128() constant_subblock_duration;

if (constant_subblock_duration == 0) {

leb128() num_subblocks;

for (i = 0; i< num_subblocks; i++) {

leb128() subblock_duration;

}

}

}

}

Semantics

parameter_id indicates the identifier for the Parameter Substream which this parameter definition refers to. There SHALL be one unique parameter_id per Parameter Substream.

parameter_rate specifies the rate used by this Parameter Substream, expressed as ticks per second. Time-related fields associated with this Parameter Substream, such as durations, SHALL be expressed in the number of ticks.

-

The parameter rate SHALL be a value such that the number of ticks per frame, computed as \[\frac{\text{parameter_rate} \times \text{num_samples_per_frame}}{\text{Audio Element sample rate}},\] is a non-zero integer.

param_definition_mode indicates whether this parameter definition specifies the duration, num_subblocks, constant_subblock_duration and subblock_duration fields for the parameter blocks with the same parameter_id.

-

When this field is set to 0, all of the duration, num_subblocks, constant_subblock_duration, and subblock_duration fields SHALL be specified in this parameter definition. None of the parameter blocks with the same parameter_id SHALL specify these same fields.

-

When this field is set to 1, none of the duration, num_subblocks, constant_subblock_duration, and subblock_duration fields SHALL be specified in this parameter definition. Instead, each parameter block with the same parameter_id SHALL specify these same fields.

duration specifies the duration for which each parameter block with the same parameter_id is valid and applicable. It SHALL NOT be set to 0.

constant_subblock_duration specifies the duration of each subblock, in the case where all subblocks except the last subblock have equal durations. If all subblocks except the last subblock do not have equal durations, the value of constant_subblock_duration SHALL be set to 0.

When constant_subblock_duration is not equal to 0,

-

num_subblocks is implicitly calculated as \[ \text{num_subblocks} = \left\lceil{ \frac{\text{duration}}{\text{constant_subblock_duration}}}\right\rceil. \]

-

If \(\textrm{num_subblocks} \times \text{constant_subblock_duration} > \text{duration}\), the actual duration of the last subblock SHALL be \[ \text{duration} - \left( \text{num_subblocks} - 1 \right) \times \text{constant_subblock_duration}. \]

When constant_subblock_duration is equal to 0, the summation of all subblock_duration in this parameter block SHALL be equal to duration.

num_subblocks specifies the number of different sets of parameter values specified in each parameter block with the same parameter_id, where each set describes a different subblock of the timeline, contiguously.

subblock_duration specifies the duration for the given subblock. It SHALL NOT be set to 0.

The values for duration, constant_subblock_duration, and subblock_duration SHALL be expressed as the number of ticks at the parameter_rate specified in the corresponding parameter definition.

3.6.2. Scalable Channel Layout Config Syntax and Semantics

The ScalableChannelLayoutConfig() class provides the configuration for a given scalable channel audio representation.

The ChannelAudioLayerConfig() class provides the configuration for a specific Channel Group.

This section specifies the syntax structures of the ScalableChannelLayoutConfig() and ChannelAudioLayerConfig() classes.

Syntax

class ScalableChannelLayoutConfig() {

unsigned int (3) num_layers;

unsigned int (5) reserved_for_future_use;

for (i = 1; i <= num_layers; i++) {

ChannelAudioLayerConfig channel_audio_layer_config(i);

}

}

class ChannelAudioLayerConfig(i) {

unsigned int (4) loudspeaker_layout(i);

unsigned int (1) output_gain_is_present_flag(i);

unsigned int (1) recon_gain_is_present_flag(i);

unsigned int (2) reserved_for_future_use;

unsigned int (8) substream_count(i);

unsigned int (8) coupled_substream_count(i);

if (output_gain_is_present_flag(i) == 1) {

unsigned int (6) output_gain_flags(i);

unsigned int (2) reserved_for_future_use;

signed int (16) output_gain(i);

}

if (i == 1 && [=loudspeaker_layout=] == 15)

unsigned int (8) expanded_loudspeaker_layout;

}

Semantics

num_layers indicates the number of Channel Groups for scalable channel audio. It SHALL NOT be set to zero and its maximum value SHALL be 6.

-

If loudspeaker_layout is set to Binaural, this field SHALL be set to 1.

channel_audio_layer_config is an instance of the ChannelAudioLayerConfig() class, which provides the i-th Channel Group's configuration, where i is the layer index provided as an input argument to this instance of the ChannelAudioLayerConfig() class.

loudspeaker_layout indicates the channel layout to be reconstructed from the precedent Channel Groups and current Channel Group. If parsers do not recognize a loudspeaker_layout for a particular layer, they SHOULD skip the channel_audio_layer_config for that layer and all subsequent layers.

In this version of the specification, loudspeaker_layout indicates one of the channel layouts listed below.

| loudspeaker_layout | Channel Layout | Loudspeaker Location Ordering | Reference |

|---|---|---|---|

| 0 | Mono | C | |

| 1 | Stereo | L/R | Loudspeaker configuration for Sound System A (0+2+0) of [ITU-2051-3] |

| 2 | 5.1ch | L/C/R/Ls/Rs/LFE | Loudspeaker configuration for Sound System B (0+5+0) of [ITU-2051-3] |

| 3 | 5.1.2ch | L/C/R/Ls/Rs/Ltf/Rtf/LFE | Loudspeaker configuration for Sound System C (2+5+0) of [ITU-2051-3] |

| 4 | 5.1.4ch | L/C/R/Ls/Rs/Ltf/Rtf/Ltr/Rtr/LFE | Loudspeaker configuration for Sound System D (4+5+0) of [ITU-2051-3] |

| 5 | 7.1ch | L/C/R/Lss/Rss/Lrs/Rrs/LFE | Loudspeaker configuration for Sound System I (0+7+0) of [ITU-2051-3] |

| 6 | 7.1.2ch | L/C/R/Lss/Rss/Lrs/Rrs/Ltf/Rtf/LFE | The combination of 7.1ch and the Left and Right top front pair of 7.1.4ch |

| 7 | 7.1.4ch | L/C/R/Lss/Rss/Lrs/Rrs/Ltf/Rtf/Ltb/Rtb/LFE | Loudspeaker configuration for Sound System J (4+7+0) of [ITU-2051-3] |

| 8 | 3.1.2ch | L/C/R/Ltf/Rtf/LFE | The front subset (L/C/R/Ltf/Rtf/LFE) of 7.1.4ch |

| 9 | Binaural | L/R | |

| 10 ~ 14 | Reserved for future use | ||

| 15 | Expanded channel layouts | Loudspeaker configurations defined in the expanded_loudspeaker_layout field |

Where C: Centre, L: Left, R: Right, Ls: Left Surround, Lss: Left Side Surround, Rs: Right Surround, Rss: Right Side Surround, Lrs: Left Rear Surround, Rrs: Right Rear Surround, Ltf: Left Top Front, Rtf: Right Top Front, Ltr: Left Top Rear, Rtr: Right Top Rear, Ltb: Left Top Back, Rtb: Right Top Back, LFE: Low-Frequency Effects

NOTE: The Ltr and Rtr of 5.1.4ch down-mixed from 7.1.4ch is within the range of Ltb and Rtb of 7.1.4ch, in terms of their positions according to [ITU-2051-3].

For a given input 3D audio signal with audio_element_type = CHANNEL_BASED, if the input 3D audio signal has height channels (e.g., 7.1.4ch or 5.1.2ch), it is RECOMMENDED to use channel layouts with height channels (i.e., higher than or equal to 3.1.2ch) for all loudspeaker_layouts.

-

Examples for RECOMMENDED list of channel layouts: 3.1.2ch/5.1.2ch, 3.1.2ch/5.1.2ch/7.1.4ch, 5.1.2ch/7.1.4ch, etc.

-

Examples for NOT RECOMMENDED list of channel layouts: 2ch/3.1.2ch/5.1.2ch, 2ch/3.1.2ch/5.1.2ch/7.1.4ch, 2ch/5.1.2ch/7.1.4ch, 2ch/7.1.4ch, etc.

NOTE: This specification allows down-mixing mechanisms (e.g., as specified in § 10.1.2.2 Annex A2.2: Down-mix Mechanism (Informative)) to drop the height channel if the output layout has no height channels. An example is down-mixing from 7.1.4ch to Mono, Stereo, 5.1ch or 7.1ch. Therefore, given an input 3D audio signal with height channels, an encoder may generate a set of scalable audio channel groups with layouts that do not have height channels.

output_gain_is_present_flag indicates if the output_gain information fields for the Channel Group are present.

-

0: No output_gain information fields for the Channel Group are present.

-

1: output_gain information fields for the Channel Group are present. In this case, output_gain_flags and output_gain fields are present.

recon_gain_is_present_flag indicates if the recon_gain information fields for the Channel Group are present in recon_gain_info_parameter_data.

-

0: No recon_gain information fields for the Channel Group are present in recon_gain_info_parameter_data.

-

1: recon_gain information fields for the Channel Group are present in recon_gain_info_parameter_data. In this case, the recon_gain_flags and recon_gain fields are present.

substream_count specifies the number of Audio Substreams. The sum of all substream_counts in this OBU SHALL be the same as num_substreams in this OBU. It SHALL NOT be set to 0.

coupled_substream_count specifies the number of referenced Audio Substreams, each of which is coded as coupled stereo channels.

Each pair of coupled stereo channels in the same Channel Group SHALL be coded in stereo mode to generate one single coded Audio Substream, also referred to as a coupled substream. Each non-coupled channel in the same Channel Group SHALL be coded in mono mode to generate one single coded Audio Substream, also known as a non-coupled substream.

-

Coupled stereo channels: L/R, Ls/Rs, Lss/Rss, Lrs/Rrs, Ltf/Rtf, Ltb/Rtb, FLc/FRc, FL/FR, SiL/SiR, BL/BR, TpFL/TpFR, TpSiL/TpSiR, TpBL/TpBR

-

Non-coupled channels: C, LFE, L, FC, LFE1

The order of the Audio Substreams in each Channel Group is specified in § 3.6.3.3 Ordering of Audio Substream Identifers.

output_gain_flags indicates the channels which output_gain is applied to. If a bit is set to 1, output_gain SHALL be applied to the channel. Otherwise, output_gain SHALL NOT be applied to the channel.

Bit position : Channel Name

b5(MSB) : Left channel (L1, L2, L3)

b4 : Right channel (R2, R3)

b3 : Left surround channel (Ls5)

b2 : Right surround channel (Rs5)

b1 : Left top front channel (Ltf)

b0 : Right top front channel (Rtf)

output_gain indicates the gain value to be applied to the mixed channels which are indicated by output_gain_flags, where each mixed channel is generated by down-mixing two or more input channels. It is computed as \(20 \times \log_{10}(f)\), where \(f\) is the factor by which to scale the mixed channels. It is stored as a 16-bit, signed, two’s complement fixed-point value with 8 fractional bits (i.e., Q7.8)([Q-Format]).

expanded_loudspeaker_layout indicates the expanded channel layout to be reconstructed from the Channel Group. This field SHALL only be present when num_layers = 1 and loudspeaker_layout is set to 15. Parsers SHOULD ignore Audio Element OBUs with an expanded_loudspeaker_layout that they do not recognize.

In this version of the specification, expanded_loudspeaker_layout indicates one of the expanded channel layouts listed below.

| expanded_loudspeaker_layout | Expanded Channel Layout | Loudspeaker Location Ordering | Reference |

|---|---|---|---|

| 0 | LFE | LFE | The low-frequency effects subset (LFE) of 7.1.4ch |

| 1 | Stereo-S | Ls/Rs | The surround subset (Ls/Rs) of 5.1.4ch |

| 2 | Stereo-SS | Lss/Rss | The side surround subset (Lss/Rss) of 7.1.4ch |

| 3 | Stereo-RS | Lrs/Rrs | The rear surround subset (Lrs/Rrs) of 7.1.4ch |

| 4 | Stereo-TF | Ltf/Rtf | The top front subset (Ltf/Rtf) of 7.1.4ch |

| 5 | Stereo-TB | Ltb/Rtb | The top back subset (Ltb/Rtb) of 7.1.4ch |

| 6 | Top-4ch | Ltf/Rtf/Ltb/Rtb | The top 4 channels (Ltf/Rtf/Ltb/Rtb) of 7.1.4ch |

| 7 | 3.0ch | L/C/R | The front 3 channels (L/C/R) of 7.1.4ch |

| 8 | 9.1.6ch | Loudspeaker location ordering of 9.1.6ch | The subset of Loudspeaker configuration for Sound System H (9+10+3) of [ITU-2051-3] |

| 9 | Stereo-F | FL/FR | The front subset (FL/FR) of 9.1.6ch |

| 10 | Stereo-Si | SiL/SiR | The side subset (SiL/SiR) of 9.1.6ch |

| 11 | Stereo-TpSi | TpSiL/TpSiR | The top side subset (TpSiL/TpSiR) of 9.1.6ch |

| 12 | Top-6ch | TpFL/TpFR/TpSiL/TpSiR/TpBL/TpBR | The top 6 channels (TpFL/TpFR/TpSiL/TpSiR/TpBL/TpBR) of 9.1.6ch |

| 13 ~ 255 | Reserved for future use |

Loudspeaker location ordering of 9.1.6ch: FLc/FC/FRc/FL/FR/SiL/SiR/BL/BR/TpFL/TpFR/TpSiL/TpSiR/TpBL/TpBR/LFE1

Where FLc: Front Left Centre, FC: Front Centre, FRc: Front Right Centre, FL: Front Left, FR: Front Right, SiL: Side Left, SiR: Side Right, BL: Back Left, BR: Back Right, TpFL: Top Front Left, TpFR: Top Front Right, TpSiL: Top Side Left, TpSiR: Top Side Right, TpBL: Top Back Left, TpBR: Top Back Right, LFE1: Low-Frequency Effects-1

For a given input 3D audio signal with an expanded channel layout defined in expanded_loudspeaker_layout, num_layers SHALL be set to 1 (i.e., it is a non-scalable channel audio element). Except 9.1.6ch Audio Element, it is RECOMMENDED to use such an Audio Element as an auxiliary Audio Element to be mixed with a primary Audio Element (e.g., TOA or 7.1.4ch) within a Mix Presentation. If parsers encounter a loudspeaker_layout = 15 for any layer other than the first layer, they SHOULD skip the channel_audio_layer_config for that layer and all subsequent layers.

The following channel layouts MAY be indicated using an existing loudspeaker_layout or expanded_loudspeaker_layout. The stereo pair FLc/FRc is indicated using Stereo (L/R), the stereo pair BL/BR is indicated using Stereo-RS (Lrs/Rrs), the stereo pair TpFL/TpFR is indicated using Stereo-TF (Ltf/Rtf), the stereo pair TpBL/TpBR is indicated using Stereo-TB (Ltb/Rtb), and FLc/FC/FRc is indicated using 3.0ch (L/C/R).

3.6.3. Scalable Channel Group and Layout

When an Audio Element is composed of \(G(r)\) number of Audio Substreams, its scalable channel audio representation is layered into \(r\) num_layers of Channel Groups.

-

The order of the Channel Groups in each Temporal Unit SHALL be same as the order of the channel_audio_layer_configs in ScalableChannelLayoutConfig().

-

The \(q\)-th Channel Group consists of \(G(q) - G(q - 1)\) number of Audio Substreams, where \(q = 1, 2, \ldots, r\) and \(G(0) = 0\).

-

Let the term "Audio Frames" mean the set of all Audio Frame OBUs (for this Audio Element) that have the same start timestamp. All Audio Frames in an IA Sequence SHALL have the same number of Audio Frame OBUs.

-

Parameter Block OBUs MAY be associated with Audio Frames.

.png)

Each Channel Group (or scalable channel audio layer) is associated with a different loudspeaker_layout. The IA decoder SHALL select one of the layers according to the following rules, in order:

-

The IA decoder SHOULD first attempt to select the layer with a loudspeaker_layout that matches the physical playback layout.

-

If there is no match, the IA decoder SHOULD select the layer with the closest loudspeaker_layout to the physical layout and then apply up- or down-mixing appropriately, after decoding and reconstruction of the channel audio. Sections § 10.1.2.2 Annex A2.2: Down-mix Mechanism (Informative) and § 7.6 Down-mix Matrix (Informative) provide examples of dynamic and static down-mixing matrices for some common layouts that MAY be used.

The relationship among all Channel Groups for the given scalable channel audio representation SHALL comply with § 3.6.3.2 Channel Group Format and the relationship among all channel layouts indicated by loudspeaker_layouts specified in an Audio Element OBU SHALL comply with § 3.6.3.1 Channel Layout Generation Rule.

3.6.3.1. Channel Layout Generation Rule

This section describes the generation rule for channel layouts for scalable channel audio.

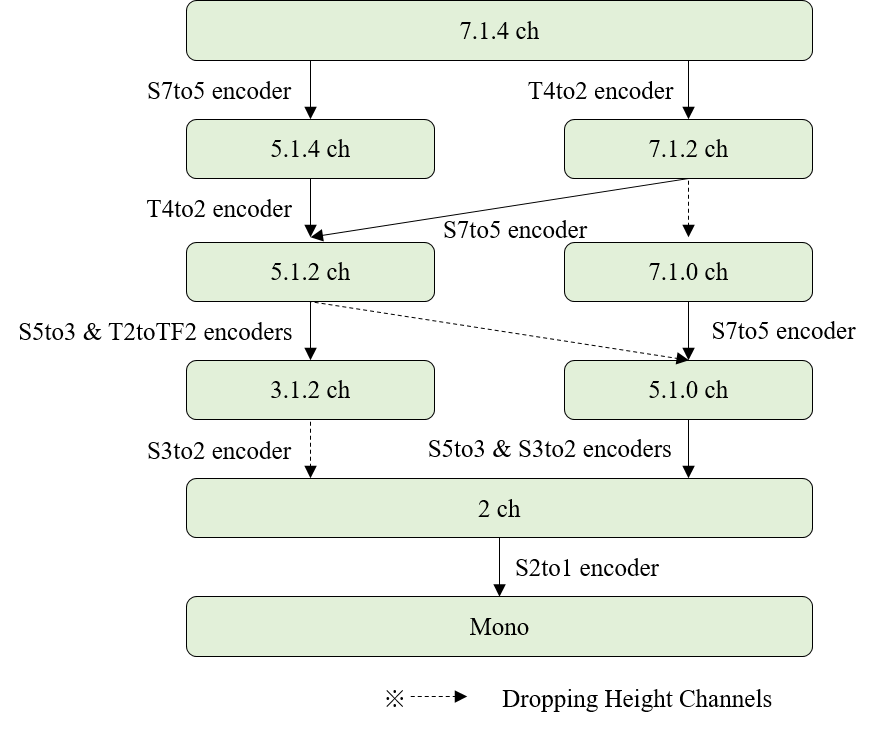

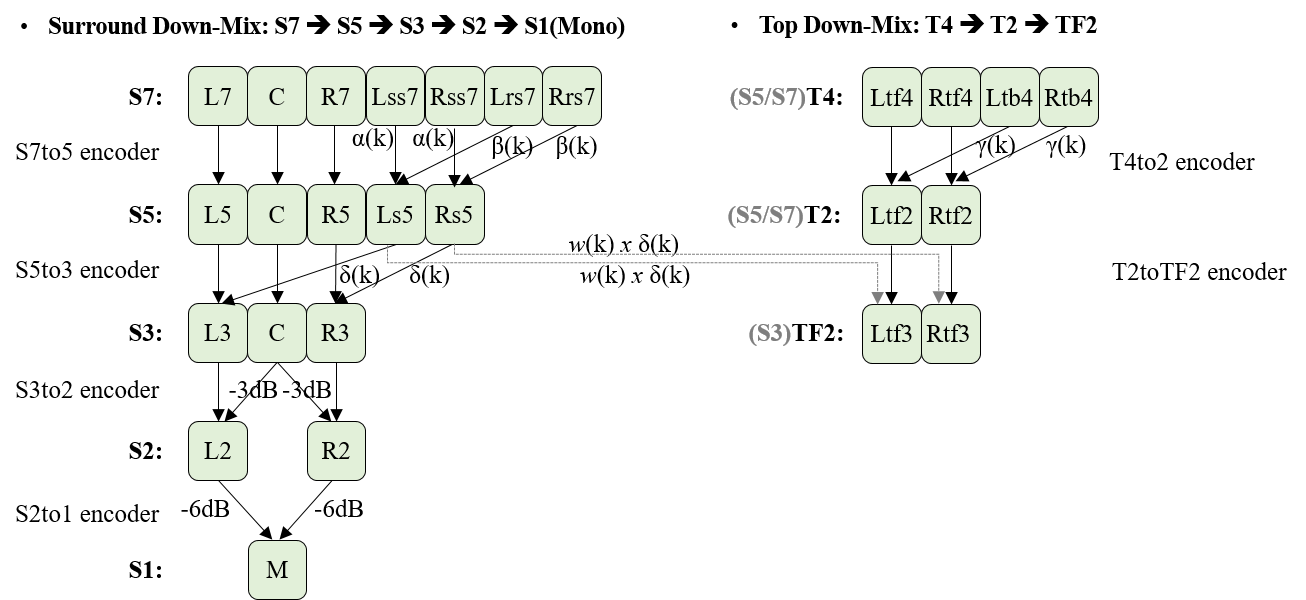

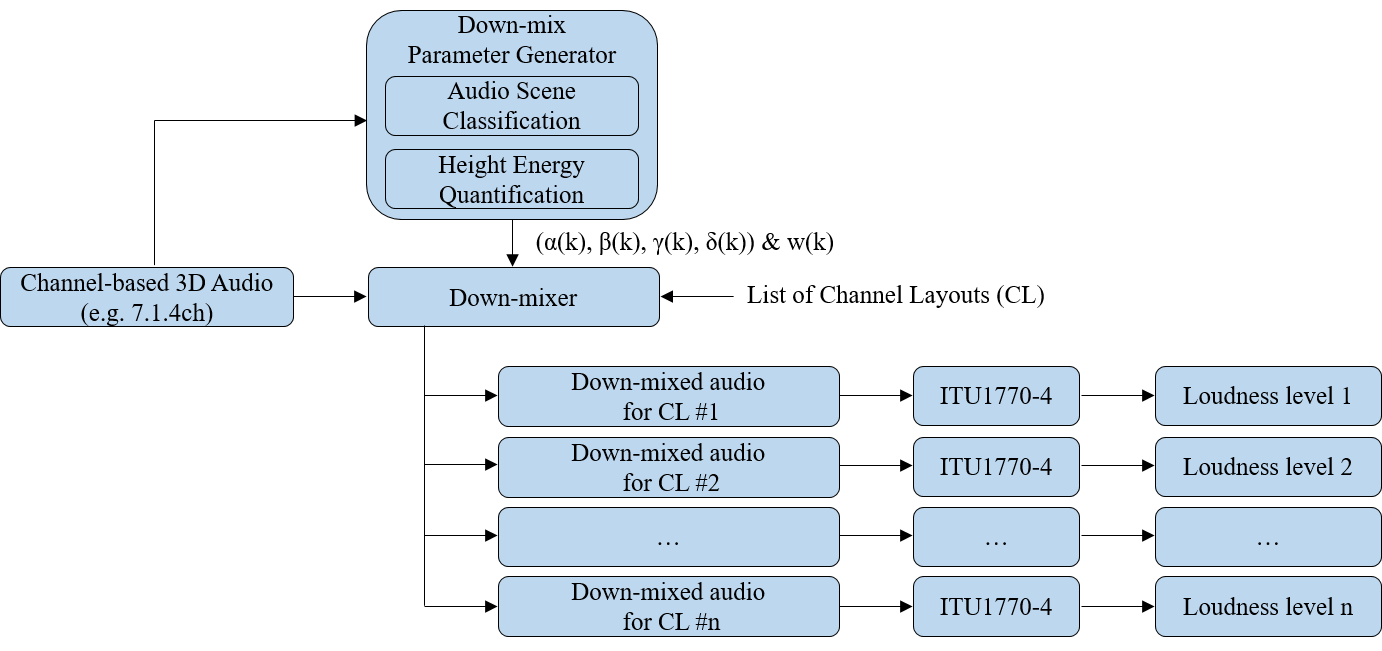

For a given channel layout (\(CL \text{#}n\)) of a channel-based input 3D audio signal, any list of CLs (\({CL \text{#}i: i = 1, 2, \ldots, n}\)) for scalable channel audio SHALL conform with the following rules:

-

\(\text{Xi} \le \text{Xi+1}\) and \(\text{Yi} \le \text{Yi+1}\) and \(\text{Zi} \le \text{Zi+1}\) except \(\text{Xi} = \text{Xi+1}\), \(\text{Yi} = \text{Yi+1}\) and \(\text{Zi} = \text{Zi+1}\) for \(i = n-1, n-2, \ldots, 1\), where the \(i\)-th channel layout \(CL \text{#}i = \text{Xi}.\text{Yi}.\text{Zi}\), \(\text{Xi}\) is the number of surround channels, \(\text{Yi}\) is the number of LFE channels, and \(\text{Zi}\) is the number of height channels.

-

\(CL \text{#}i\) is one of the loudspeaker_layouts supported in this version of the specification.

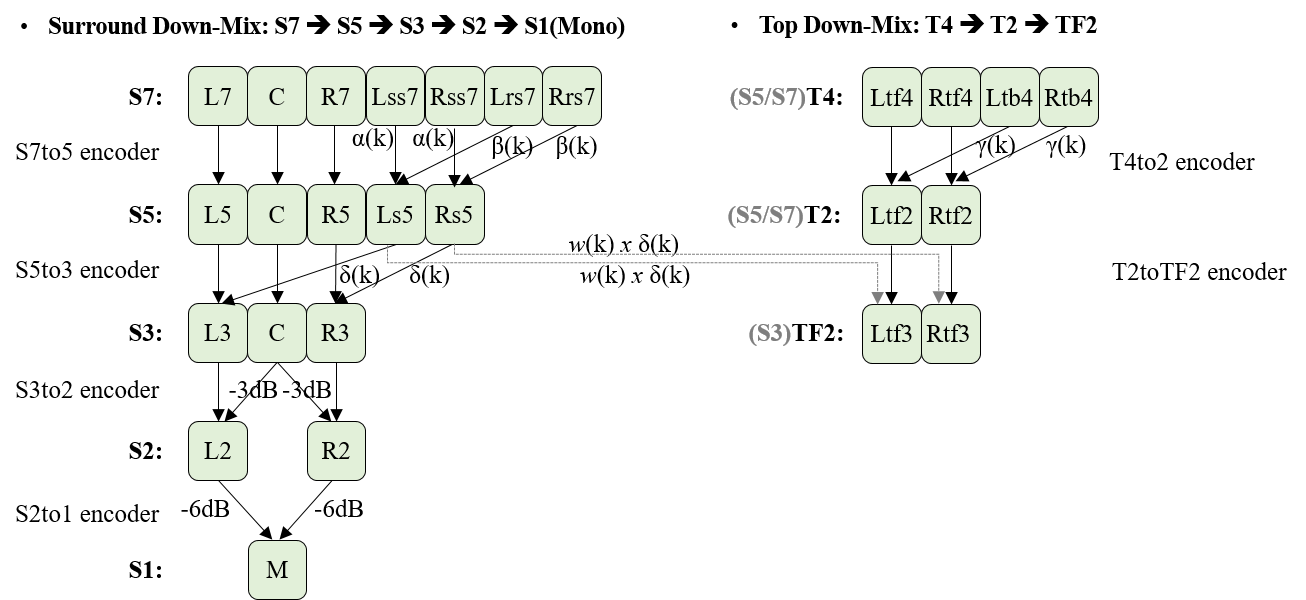

Scalable channel audio with num_layers \(> 1\) SHALL only allow down-mix paths that conform to the rules above, as depicted in the figure below.

3.6.3.2. Channel Group Format

The Channel Group format SHALL conform to the following rules:

-

It consists of C number of channels and is structured to \(r\) number of Channel Groups, where \(C\) is the number of channels for the input 3D audio signal.

-

Channel Group \(\text{#}1\) (as called BCG): This Channel Group is the down-mixed audio itself for \(CL \text{#}1\) generated from the input 3D audio signal. It contains a \(C1\) number of channels.

-

Channel Group \(\text{#}i\) (as called DCG, \(i = 2, 3, \ldots, n)\): This Channel Group contains (\(\text{Ci} – \text{Ci}-1)\) number of channels. \((\text{Ci} – \text{Ci}-1)\) channel(s) consists of as follows:

-

\((\text{Xi} – \text{Xi-1})\) surround channel(s) if \(\text{Xi} > \text{Xi-1}\) . When \(S_{\text{set}} = \{x \mid \text{Xi-1} < x \le \text{Xi}\} \) and \(x\) is an integer,

-

If 2 is an element of \(S_{\text{set}}\), the L2 channel is contained in this \(CG \text{#}i\).

-

If 3 is an element of \(S_{\text{set}}\), the Centre channel is contained in this \(CG \text{#}i\).

-

If 5 is an element of \(S_{\text{set}}\), the L5 and R5 channels are contained in this \(CG \text{#}i\).

-

If 7 is an element of \(S_{\text{set}}\), the Lss7 and Rss7 channels are contained in this \(CG \text{#}i\).

-

-

The LFE channel if \(\text{Yi} > \text{Yi-1}\).

-

\((\text{Zi} - \text{Zi-1})\) top channels if \(\text{Zi} > \text{Zi-1}\).

-

If \(\text{Zi-1} = 0\), the top channels of the down-mixed audio for \(CL \text{#}i\) are contained in this Channel Group \(\text{#}i\).

-

If \(\text{Zi-1} = 2\), the Ltf and Rtf channels of the down-mixed audio for \(CL \text{#}i\) are contained in this Channel Group \(\text{#}i\).

-

-

Where \(\text{Xi}.\text{Yi}.\text{Zi}\) denotes the channel layout in \(CL \text{#}i\), where \(\text{Xi}\) is the number of surround channels, \(\text{Yi}\) is the number of LFE channels and \(\text{Zi}\) is the number of height channels.

-

3.6.3.3. Ordering of Audio Substream Identifers

Let a particular Channel Group's Audio Substreams be indexed as \(\left[c, n_c\right]\), where a Channel Group format is described in § 3.6.3.2 Channel Group Format and

-

\(c\) is the Channel Group index, where \(c = 1, 2, \ldots, C\) and \(C\) is the number of Channel Groups.

-

\(n_c\) is the Audio Substream index in the \(c\)-th Channel Group, where \(n_c = 1, 2, \ldots, N_c\) and \(N_c\) is the number of Audio Substreams in the \(c\)-th Channel Group.

Then, the i-th audio_substream_id maps to a Channel Group's Audio Substreams as follows, where i is the index of the array:

\[ \left[ \left[ 1, 1 \right], \left[ 1, 2 \right], \cdots, \left[ 1, N_1 \right], \left[ 2, 1 \right], \left[ 2, 2 \right], \cdots, \left[ 2, N_2 \right], \cdots, \left[ C, 1 \right], \left[ C, 2 \right], \cdots, \left[ C, N_c \right] \right] \]

The order of the Audio Substreams in each Channel Group (i.e., the semantics of \(n_c\)) SHALL be as follows:

-

Coupled substreams come first and are followed by non-coupled substreams.

-

The coupled substreams for the surround channels come first and are followed by the coupled substreams for the top channels.

-

The coupled substreams for the front channels come first and are followed by the coupled substreams for the side, rear and back channels.

-

The coupled substreams for the side channels come first and are followed by the coupled substreams for the rear channels.

-

The Centre (or Front Centre) channel comes first and is followed by the LFE (or LFE1) channel, and then the L channel.

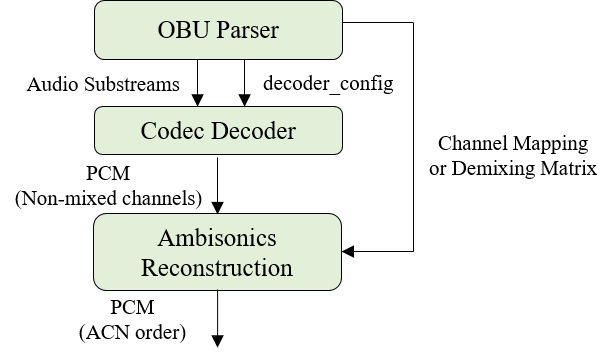

3.6.4. Ambisonics Config Syntax and Semantics

The AmbisonicsConfig() class provides the configuration for a given Ambisonics representation. This section specifies the syntax structure of the AmbisonicsConfig() class.

In this specification, the AmbiX format is adopted, which uses Ambisonics Channel Number (ACN) channel ordering and normalizes the channels with Schmidt Semi-Normalization (SN3D), both defined in [ITU-2076-2].

Syntax

class AmbisonicsConfig() {

leb128() ambisonics_mode;

if (ambisonics_mode == MONO) {

AmbisonicsMonoConfig ambisonics_mono_config;

} else if (ambisonics_mode == PROJECTION) {

AmbisonicsProjectionConfig ambisonics_projection_config;

}

}

class AmbisonicsMonoConfig() {

unsigned int (8) output_channel_count; // C

unsigned int (8) substream_count; // N

unsigned int (8 x C) channel_mapping;

}

class AmbisonicsProjectionConfig() {

unsigned int (8) output_channel_count; // C

unsigned int (8) substream_count; // N

unsigned int (8) coupled_substream_count; // M

signed int (16 x (N + M) x C) demixing_matrix;

}

Semantics

ambisonics_mode specifies the method of coding Ambisonics.

ambisonics_mode: Method of coding Ambisonics. 0 : MONO 1 : PROJECTION

If ambisonics_mode is equal to MONO, this indicates that the Ambisonics channels are coded as individual mono Audio Substreams. For LPCM, ambisonics_mode SHALL be equal to MONO.

If ambisonics_mode is equal to PROJECTION, this indicates that the Ambisonics channels are first linearly projected onto another subspace before coding as a mix of coupled stereo and mono Audio Substreams.

output_channel_count complies with channel count in [RFC-8486] with the following restrictions:

-

The allowed numbers of output_channel_count are \(\left( 1 + n \right)^2\), for \(n = 0, 1, 2, \ldots, 14\).

-

In other words, a scene-based Audio Element SHALL NOT include non-diegetic channels.

substream_count specifies the number of Audio Substreams. It SHALL be the same as num_substreams in this OBU.

channel_mapping complies with the "Channel Mapping" field for ChannelMappingFamily = 2 in [RFC-8486].

coupled_substream_count specifies the number of referenced Audio Substreams that are coded as coupled stereo channels, where \(\text{M} \le \text{N}\).

demixing_matrix complies with the "Demixing Matrix" field for ChannelMappingFamily = 3 in [RFC-8486] except that the byte order of each of the matrix coefficients is converted to big-endian.

A scene-based Audio Element has only one Channel Group, which includes all Audio Substreams that it refers to. The order of the Audio Substreams in the Channel Group SHALL conform to [RFC-8486].

3.7. Mix Presentation OBU Syntax and Semantics

The Mix Presentation OBU provides information on how to render and mix one or more Audio Elements to generate the final Immersive Audio output, with details provided in § 7.3 Mix Presentation. This section specifies the payload format of the Mix Presentation OBU.

An IA Sequence MAY have one or more Mix Presentations specified. The IA parser SHALL select the appropriate Mix Presentation to process according to the rules specified in § 7.3.1 Selecting a Mix Presentation.

A Mix Presentation MAY contain one or more sub-mixes. Common use cases MAY specify only one sub-mix, which includes all rendered and processed Audio Elements used in the Mix Presentation. The use-case for specifying more than one sub-mix arises if an IA multiplexer is merging two or more IA Sequences. In this case, it MAY choose to capture the loudness information from the original IA Sequences in multiple sub-mixes, instead of recomputing the loudness information for the final mix.

Syntax

class MixPresentationOBU() {

leb128() mix_presentation_id;

leb128() count_label;

string annotations_language[count_label];

string localized_presentation_annotations[count_label];

leb128() num_sub_mixes;

for (i = 0; i < num_sub_mixes; i++) {

leb128() num_audio_elements;

for (j = 0; j < num_audio_elements; j++) {

leb128() audio_element_id;

string localized_element_annotations[count_label];

RenderingConfig rendering_config;

MixGainParamDefinition element_mix_gain;

}

MixGainParamDefinition output_mix_gain;

leb128() num_layouts;

for (j = 0; j < num_layouts; j++) {

Layout loudness_layout;

LoudnessInfo loudness;

}

}

MixPresentationTags mix_presentation_tags;

}

Semantics

mix_presentation_id defines an identifier for a Mix Presentation. Within an IA Sequence, there SHALL be one unique mix_presentation_id per Mix Presentation. There SHALL be exactly one Mix Presentation OBU with a given identifier in a set of Descriptors. This identifier MAY be used by the application to select which Mix Presentation(s) to offer.

count_label indicates the number of labels in different languages.

annotations_language specifies the language which both localized_presentation_annotations and localized_element_annotations are written in. It SHALL conform to [BCP-47]. The same language SHALL NOT be duplicated in this array.

-

The i-th localized_presentation_annotations and localized_element_annotations SHALL be written in the language indicated by the i-th annotations_language, where i = 0, 1, ..., count_label -1.

localized_presentation_annotations provides a description for this Mix Presentation, and is informational metadata that an IA parser SHOULD refer to when selecting the Mix Presentation to use. The metadata MAY also be used by the playback system to display information to the user but is not used in the rendering or mixing process to generate the final output audio signal.

num_sub_mixes specifies the number of sub-mixes. It SHALL NOT be set to 0.

num_audio_elements specifies the number of Audio Elements that are used in each sub-mix of this Mix Presentation to generate the final output audio signal for playback. It SHALL NOT be set to 0. There SHALL be no duplicate values of audio_element_id within one Mix Presentation.

audio_element_id indicates the identifier for an Audio Element which this Mix Presentation refers to. Parsers SHOULD ignore the Mix Presentation OBU with an Audio Element that they don’t recognize.

localized_element_annotations provides a description for the referenced Audio Element, and is informational metadata that the playback system MAY use to display information to the user. It is not used in the rendering or mixing process to generate the final output audio signal.

rendering_config is an instance of the RenderingConfig() class, which provides the metadata required for rendering the referenced Audio Element.

element_mix_gain is an instance of the MixGainParamDefinition() class. It provides the parameter definition for the gain value that is applied to all channels of the referenced and rendered Audio Element signal, before being summed with other processed Audio Elements. The corresponding parameter data to be provided in Parameter Block OBUs with the same parameter_id is specified in the MixGainParamDefinition() class.

output_mix_gain is an instance of the MixGainParamDefinition() class. It provides the parameter definition for the gain value that is applied to all channels of the mixed audio signal to generate the audio signal for playback. The corresponding parameter data to be provided in Parameter Block OBUs with the same parameter_id is specified in the MixGainParamDefinition() class.

num_layouts specifies the number of layouts for this sub-mix on which the loudness information was measured.

loudness_layout is an instance of the Layout() class, which provides information about the layout that was used to measure the loudness information provided in this sub-mix.

loudness is an instance of the LoudnessInfo() class, which provides the loudness information for this sub-mix’s Rendered Mix Presentation, measured on the layout provided by loudness_layout.

The layout specified in loudness_layout SHOULD NOT be higher than the highest layout among the layouts provided by the Audio Elements. In other words, rendering from an Audio Element with the highest layout to the loudness_layout SHOULD NOT require an up-mix. In the case of a CHANNEL_BASED Audio Element with an expanded channel layout (i.e., loudspeaker_layout = 15), the Audio Element is considered to be providing the reference layout that it is a subset of. The exception is when the Audio Element is a zero-order Ambisonics or Mono channel; they MAY be rendered to Stereo. In this exception case, the loudness_layout for a zero-order Ambisonics or Mono channel Audio Element SHOULD NOT be higher than Stereo.

Each sub-mix SHALL include loudness for Stereo (i.e., a loudness_layout with the sound_system field = Loudspeaker configuration for Sound System A (0+2+0)).

-

If a sub-mix’s Rendered Mix Presentation is Mono, its loudness for Stereo SHOULD be measured on the Stereo signal generated using the equations: \[\text{L} = 0.707 \times \text{Mono}\] \[\text{R} = 0.707 \times \text{Mono}\]

If a sub-mix in a Mix Presentation OBU includes only one single scalable channel audio, it SHALL comply with the following:

-

num_layouts SHALL be greater than or equal to the num_layers field specified in its scalable_channel_layout_config, except in the following cases:

-

The highest loudness_layout specified in one sub-mix is the layout that was used for authoring the sub-mix. The exception is when the Audio Element is a zero-order Ambisonics or Mono channel.

-

The highest loudness_layout for a zero-order Ambisonics or Mono channel Audio Element is Stereo.

-

mix_presentation_tags is an instance of the MixPresentationTags() class, which provides informational metadata about a Mix Presentation, in addition to localized_presentation_annotations.

The MixPresentationTags() class MAY or MAY NOT be present in a Mix Presentation OBU. If the obu_size of a Mix Presentation OBU is greater than the size up to the end of num_sub_mixes loop, the MixPresentationTags() SHALL be present in the Mix Presentation OBU. For a given IA Sequence with multiple Mix Presentation OBUs, the MixPresentationTags() MAY be present in some Mix Presentation OBUs and MAY NOT be present in the other Mix Presentation OBUs.

3.7.1. Rendering Config Syntax and Semantics

The RenderingConfig() class provides information on how to render the referenced Audio Element. This section specifies the syntax structure of the RenderingConfig() class.

During playback, an Audio Element SHOULD be rendered using a pre-defined renderer according to § 7.3.2 Rendering an Audio Element.

Syntax

class RenderingConfig() {

unsigned int (2) headphones_rendering_mode;

unsigned int (6) reserved_for_future_use;

leb128() rendering_config_extension_size;

unsigned int (8 x rendering_config_extension_size) rendering_config_extension_bytes;

}

Semantics

headphones_rendering_mode indicates whether the input channel-based Audio Element is rendered to stereo loudspeakers or spatialized with a binaural renderer when played back on headphones. If the playback layout is a loudspeaker layout or the input Audio Element is not CHANNEL_BASED, the parsers SHALL ignore this field.

-

0: Indicates that the input Audio Element SHALL be rendered to loudspeaker_layout = Stereo.

-

1: Indicates that the input Audio Element SHALL be rendered with a binaural renderer.

-

2~3: Reserved for future use.

Parsers encountering a reserved value of headphones_rendering_mode SHALL ignore the Mix Presentation OBU that contains this rendering_config.

reserved_for_future_use SHALL be ignored by the parser. It is for future use.

rendering_config_extension_size indicates the size in bytes of rendering_config_extension_bytes.

rendering_config_extension_bytes represents reserved bytes for future use. Parsers that don’t understand these bytes SHOULD ignore them.

3.7.2. Mix Gain Parameter Definition Syntax and Semantics

The MixGainParamDefinition() class provides the parameter definition for any mix gains that need to be applied to a signal.

This section specifies the syntax structures of the MixGainParamDefinition() class.

Syntax

class MixGainParamDefinition() extends ParamDefinition() {

signed int (16) default_mix_gain;

}

Semantics

default_mix_gain specifies the default mix gain value to apply when there are no Parameter Block OBUs with the same parameter_id provided. This value is expressed in dB and SHALL be applied to all channels in the rendered Audio Element or the mixed audio signal. It is stored as a 16-bit, signed, two’s complement fixed-point value with 8 fractional bits (i.e., Q7.8)([Q-Format]).

3.7.3. Layout Syntax and Semantics

The Layout() class specifies either a binaural system or a sound system with pre-defined physical loudspeaker positions according to [ITU-2051-3]. This section specifies the syntax structure of the Layout() class.

Syntax

class Layout() {

unsigned int (2) layout_type;

if (layout_type == LOUDSPEAKERS_SS_CONVENTION) {

unsigned int (4) sound_system;

unsigned int (2) reserved_for_future_use;

}

else if (layout_type == BINAURAL or RESERVED) {

unsigned int (6) reserved_for_future_use;

}

}

Semantics

layout_type specifies the layout type.

layout_type : Layout type

0 - 1 : RESERVED

2 : LOUDSPEAKERS_SS_CONVENTION

3 : BINAURAL

-

A value of 0 or 1 is reserved for future use.

-

A value of 2 indicates that the layout is defined using the sound system convention of [ITU-2051-3].

-

A value of 3 indicates that the layout is binaural.

sound_system specifies one of the sound systems A to J as specified in [ITU-2051-3], 7.1.2ch, 3.1.2ch, Mono, or 9.1.6ch.

-

0: It indicates Loudspeaker configuration for Sound System A (0+2+0)

-

1: It indicates Loudspeaker configuration for Sound System B (0+5+0)

-

2: It indicates Loudspeaker configuration for Sound System C (2+5+0)

-

3: It indicates Loudspeaker configuration for Sound System D (4+5+0)

-

4: It indicates Loudspeaker configuration for Sound System E (4+5+1)

-

5: It indicates Loudspeaker configuration for Sound System F (3+7+0)

-

6: It indicates Loudspeaker configuration for Sound System G (4+9+0)

-

7: It indicates Loudspeaker configuration for Sound System H (9+10+3)

-

8: It indicates Loudspeaker configuration for Sound System I (0+7+0)

-

9: It indicates Loudspeaker configuration for Sound System J (4+7+0)

-

10: It indicates the same loudspeaker configuration as loudspeaker_layout = 6 (i.e., 7.1.2ch)

-

11: It indicates the same loudspeaker configuration as loudspeaker_layout = 8 (i.e., 3.1.2ch)

-

12: It indicates Mono

-

13: It indicates the same loudspeaker configuration as expanded_loudspeaker_layout = 8 (i.e., 9.1.6ch)

-

14 ~ 15: Reserved for future use

When a value for layout_type or sound_system is not supported, parsers SHOULD ignore this Layout() and any associated LoudnessInfo().

3.7.4. Loudness Info Syntax and Semantics

The LoudnessInfo() class provides loudness information for a given audio signal. This section specifies the syntax structure of the LoudnessInfo() class.

Each signed value is stored as Q7.8 fixed-point values([Q-Format]).

Syntax

class LoudnessInfo() {

unsigned int (8) info_type;

signed int (16) integrated_loudness;

signed int (16) digital_peak;

if (info_type & 1) {

signed int (16) true_peak;

}